Introduction

SaaS platforms will increasingly have an AI component, and leveraging AI technologies can provide significant advantages in terms of efficiency, personalization, and scalability. Large Language Models (LLMs) offer a powerful solution for enhancing natural language processing tasks in SaaS applications. Enterprises the world over are already leveraging LLMs to make sense of their vast trove of private text data - be it logs, documentation, reports, etc.

In this tutorial, we consider one such use case - automating the creation of a question-answering application over company documentation for a software product or service. Within the domain of LLMs, the technique of Retrieval Augmented Generation (RAG) is best placed to build such an application efficiently and effectively. Specifically, we use the documentation of E2E Networks (https://www.e2enetworks.com/), which is an AI-first hyperscaler based out of India. While the tutorial proceeds as a notebook, you need to run the code in a .py file as it also contains a Flask app.

What Is RAG?

LLMs are general-purpose text generation and completion models trained on a very large corpus of publicly available data such as books, Wikipedia, blogs, and news. However, retraining these models on new data is computationally expensive and time-consuming, so they are not kept up to date with the latest information. In addition, there is always privately held data that these models were never trained on, and they do not perform as well in those contexts.

RAG is an architecture that provides a fast and cost-effective way to address some of these limitations. It enhances the capabilities of large language models by incorporating contextually relevant information retrieved from a knowledge base, usually a collection of documents.

Problem Statement and Solution

Problem:

Privately held data such as enterprise datasets, proprietary documents, or documents that are constantly updated pose a challenge to the effective general use of LLMs. Using LLMs to answer questions about such data tends to produce hallucinations - grammatically correct but factually and contextually incorrect responses.

A naive solution would be to pass all such data to an LLM as input. However, that does not work either, because of the limited context window of LLMs and the per-token cost of using them (a token is roughly a word or a piece of a word). For example, a 100-page document is typically too large to pass to an LLM in a single prompt in order to answer questions about it.
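As a back-of-the-envelope illustration of why a long document does not fit, the sketch below estimates a token count from a page count. Every number in it (words per page, tokens per word, the context size, the space reserved for the answer) is an assumed round figure chosen for illustration, not a measurement from this tutorial or a property of a specific model.

```python
import math

# All constants below are illustrative assumptions, not measured values.
WORDS_PER_PAGE = 500      # assumed average for a text-heavy page
TOKENS_PER_WORD = 1.3     # assumed average; real tokenizers vary with the text
CONTEXT_WINDOW = 8_192    # assumed context size in tokens, for illustration only


def estimate_tokens(pages: int) -> int:
    """Rough token count for a document with the given number of pages."""
    return math.ceil(pages * WORDS_PER_PAGE * TOKENS_PER_WORD)


def fits_in_context(pages: int, reserved_for_answer: int = 512) -> bool:
    """True if the whole document plus room for the answer fits in one prompt."""
    return estimate_tokens(pages) + reserved_for_answer <= CONTEXT_WINDOW
```

With these assumed figures, a 100-page document comes to roughly 65,000 tokens, several times the assumed window, while a 5-page document fits comfortably.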

Solution: RAG

This is where the RAG architecture becomes essential. We need to not only connect all the relevant data sources to the LLM but also provide targeted chunks of the document as input to the LLM. These chunks should be big enough to encompass all the needed information and, at the same time, small enough to fit into the input context window of an LLM. RAG achieves this by introducing a document retrieval step before data is fed into an LLM. The document retrieval component takes in the user's input query and retrieves only the most similar document chunks. Even before that, documents are divided into (generally) fixed-sized chunks, converted from text to numerical vectors, and indexed into a vector database.

The RAG Architecture

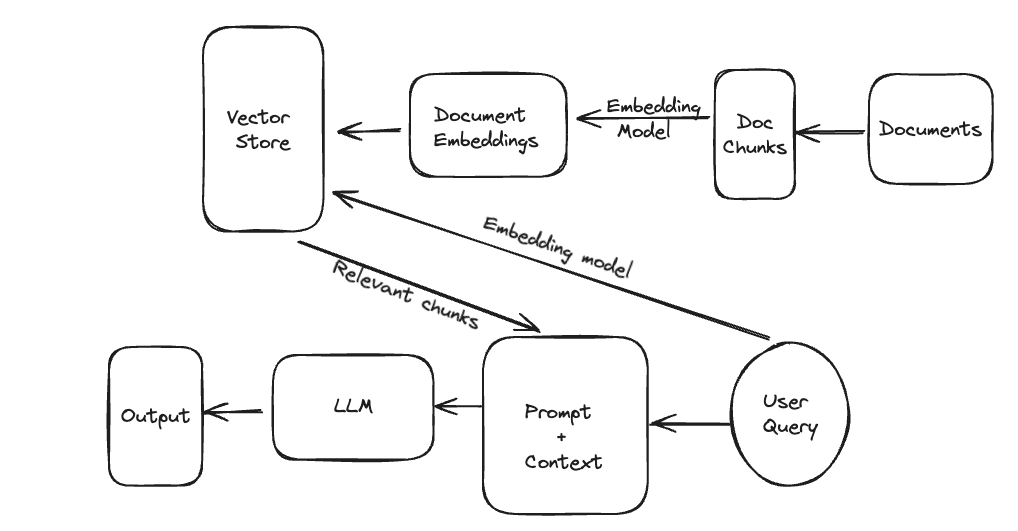

The RAG architecture thus consists of the following components:

- Document Chunking: As the document base could be very large, each document is divided into fixed-size chunks.

- Embedding Generation: Each chunk is separately vectorized using an embedding model.

- Vector Store: The document chunks are indexed in a vector space, along with any metadata.

- Retrieval: Chunks that are closest in the vector space to the embedding of the user input query are retrieved.

- Prompting an LLM: The retrieved chunks form the context for an LLM to answer the user query.
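To make the retrieval and prompting steps concrete, here is a toy sketch that uses only the Python standard library. It is not the tutorial's implementation: a bag-of-words count vector stands in for a neural embedding model, a plain list stands in for the vector database, and the function names and sample chunks are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are most similar to the query vector."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Assemble the retrieved chunks and the question into a single prompt string."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


# Made-up chunks, for illustration only.
SAMPLE_CHUNKS = [
    "Reset your password from the account settings page.",
    "Invoices are generated at the start of every month.",
    "Backups run nightly and are kept for thirty days.",
]
```

Calling `build_prompt(q, retrieve(q, SAMPLE_CHUNKS, k=1))` with a question about resetting a password yields a prompt that contains only the first chunk. A real system replaces `embed` with a learned embedding model, which can match paraphrases that share no words with the query.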

Prerequisites

For this task, we consider the Mistral-7B model, a relatively recent open-source large language model developed by Mistral AI, a French startup. It has gained attention for outperforming the popular Llama 2 models. You can either use this model in a cloud environment or, with some processing, run it on your laptop if it has enough memory. It can run smoothly on Apple Silicon MacBooks (M1, M2, and M3), provided they have at least 16GB of memory. The trick to running it with less memory is a method called quantization, which essentially reduces the precision of the model weights from 32 bits to as few as 4 bits. More about quantization here.

One can download a quantized version of the Mistral model from Hugging Face. In this link, go to Files and Versions and select a model to download. I recommend downloading the mistral-7b-instruct-v0.2.Q4_K_M.gguf model, as it offers a good balance between speed and accuracy. To run GGUF files, you need the llama-cpp-python client. This is also integrated within LangChain, so all you need to do is load the model via LangChain.

All the required libraries are listed here; install them either in a Jupyter notebook or in a virtual environment.
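To see why quantization matters for laptop-sized memory, the following rough calculation estimates the memory taken by the weights alone at different precisions. It takes "7B" at face value as 7 billion parameters and ignores activations, the KV cache, and file-format overhead, so treat the results as order-of-magnitude estimates only.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: "7B" read as 7 billion parameters; the exact count differs slightly.
NUM_PARAMS = 7e9

for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: ~{weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

Under these assumptions the weights need about 28 GB at 32-bit precision and about 3.5 GB at 4-bit precision. Real quantized files are usually somewhat larger than the naive 4-bit figure, because such schemes typically keep some tensors at higher precision.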

Import Libraries

Data Ingestion

We scrape the documentation of E2E Networks using BeautifulSoup and LangChain's recursive URL loader.
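The scraping code itself is not reproduced in this text. As a rough illustration of the text-extraction step only, the sketch below turns raw HTML into plain text with the standard library's `html.parser`. This is a dependency-free stand-in for what BeautifulSoup does in the tutorial, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text and skips the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a skipped element.
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    """Return the visible text of an HTML page, one text fragment per line."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)
```

LangChain's `RecursiveUrlLoader` accepts an `extractor` callable that maps raw HTML to text, so a function of this shape, or a BeautifulSoup-based equivalent, can be supplied there while the loader follows links from a root URL.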

Data Cleaning

We need to clean the data, as it contains many newline characters and non-alphanumeric characters. Also, some headings and sections appear on every page, so we find the most frequently occurring n-grams and remove them as well.
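One possible way to implement this cleaning is sketched below with the standard library. The function names, the n-gram length of 3, and the rule "appears on at least 80% of pages" are assumptions made for illustration; the tutorial's own thresholds are not stated here. Note that stripping every non-alphanumeric character also removes punctuation.

```python
import re
from collections import Counter


def normalize(text: str) -> str:
    """Replace non-alphanumeric characters with spaces and collapse whitespace."""
    text = re.sub(r"[^A-Za-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def frequent_ngrams(pages: list[str], n: int = 3, min_share: float = 0.8) -> set[tuple[str, ...]]:
    """N-grams found on at least `min_share` of the pages (likely shared headers or footers)."""
    page_counts: Counter = Counter()
    for page in pages:
        words = page.split()
        # A set, so each n-gram is counted at most once per page.
        page_counts.update({tuple(words[i:i + n]) for i in range(len(words) - n + 1)})
    threshold = min_share * len(pages)
    return {gram for gram, count in page_counts.items() if count >= threshold}


def strip_ngrams(page: str, grams: set[tuple[str, ...]], n: int = 3) -> str:
    """Remove every word covered by one of the boilerplate n-grams."""
    words = page.split()
    drop = [False] * len(words)
    for i in range(len(words) - n + 1):
        if tuple(words[i:i + n]) in grams:
            drop[i:i + n] = [True] * n
    return " ".join(w for w, d in zip(words, drop) if not d)


def clean_pages(raw_pages: list[str], n: int = 3, min_share: float = 0.8) -> list[str]:
    """Normalize each page, then strip n-grams shared by most pages."""
    pages = [normalize(p) for p in raw_pages]
    grams = frequent_ngrams(pages, n, min_share)
    return [strip_ngrams(p, grams, n) for p in pages]
```

Counting each n-gram once per page, rather than by total occurrences, keeps a phrase that is repeated many times on a single page from being mistaken for site-wide boilerplate.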

Perform Chunking
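The chunking code is not reproduced in this text. As a minimal sketch of the idea, assuming character-based chunks with a small overlap between neighbours, a chunker could look like the following. The default sizes are placeholders, and a library splitter such as LangChain's `RecursiveCharacterTextSplitter` could be used instead.

```python
def chunk_text(text: str, chunk_size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most `chunk_size` characters.

    Consecutive chunks share `overlap` characters so that a sentence cut at a
    chunk boundary still appears whole in at least one chunk when it is short.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks
```

The chunk size trades off two things mentioned earlier: larger chunks carry more context per retrieved item, while smaller chunks leave more room in the context window for several retrieved items.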

Load the Mistral Model

Now we proceed to load the Mistral model. Provide the path to the .gguf file downloaded earlier in the model_path argument of LangChain's LlamaCpp wrapper. You can also set further arguments such as temperature, top_p, and top_k.
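A minimal sketch of this step, assuming the model is loaded through the `LlamaCpp` class from `langchain_community.llms`, is shown below. The sampling values and context size are placeholders rather than the tutorial's settings, and `load_mistral` is a hypothetical helper name.

```python
from pathlib import Path

# Placeholder generation settings; tune them for your own use case.
GENERATION_PARAMS = {
    "temperature": 0.1,
    "top_p": 0.95,
    "top_k": 40,
    "max_tokens": 512,
    "n_ctx": 4096,
}


def load_mistral(model_path: str, **overrides):
    """Load a GGUF model file through LangChain's LlamaCpp wrapper."""
    path = Path(model_path)
    if not path.is_file():
        raise FileNotFoundError(f"No model file at {path}")

    # Imported here so the path check above runs even when the heavy
    # llama-cpp-python dependency is not installed yet.
    from langchain_community.llms import LlamaCpp

    params = {**GENERATION_PARAMS, **overrides}
    return LlamaCpp(model_path=str(path), **params)
```

For example, `llm = load_mistral("mistral-7b-instruct-v0.2.Q4_K_M.gguf", temperature=0.0)` would load the file recommended above from the current directory, after which `llm.invoke("...")` generates a completion.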

Define Embedding Model

We use the sentence-transformers library to download and initialize the embedding model.

Index in Vector Store

Finally, we index the vectorized document chunks into the Qdrant vector store.

We see that the Qdrant vector store retrieves a limited number of document chunks that best match the query. These chunks are passed to the LLM in a prompt, along with the query, and the LLM answers from within these document chunks.
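The embedding and indexing steps could be wired together roughly as follows. This is a sketch under several assumptions: it uses the `HuggingFaceEmbeddings` and `Qdrant` wrappers from `langchain_community`, an in-memory Qdrant instance, and an embedding model name chosen only as an example. The tutorial's actual model, collection name, and number of retrieved chunks are not stated in this text.

```python
def build_retriever(
    chunks: list[str],
    model_name: str = "sentence-transformers/all-MiniLM-L6-v2",  # assumed example model
    k: int = 3,  # assumed number of chunks returned per query
):
    """Embed the chunks, index them in an in-memory Qdrant store, and return a retriever."""
    if not chunks:
        raise ValueError("chunks must not be empty")

    # Imported here so the argument check above runs without the heavy dependencies.
    from langchain_community.embeddings import HuggingFaceEmbeddings
    from langchain_community.vectorstores import Qdrant

    embeddings = HuggingFaceEmbeddings(model_name=model_name)
    store = Qdrant.from_texts(
        chunks,
        embeddings,
        location=":memory:",      # keep the index in process memory
        collection_name="docs",   # hypothetical collection name
    )
    return store.as_retriever(search_kwargs={"k": k})
```

The returned retriever can then be called with `retriever.invoke("some question")` to obtain the `k` most similar chunks as LangChain `Document` objects.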

Prompting the LLM

We use LangChain Expression Language (LCEL) to construct a chain that prompts the LLM. The prompt includes the retrieved document chunks as context and the input query as a question. Finally, we use a text output parser to complete the chain, which converts the LLM's output into a plain Python string.
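A chain of this shape can be sketched as below. The prompt wording is an assumption, not the tutorial's own template, and `build_chain` expects a LangChain retriever and LLM created elsewhere.

```python
# Assumed prompt wording; adapt it to your model and use case.
PROMPT_TEMPLATE = """Answer the question using only the context below.
If the context does not contain the answer, say that you do not know.

Context:
{context}

Question: {question}

Answer:"""


def format_docs(docs) -> str:
    """Join retrieved chunks into one context string.

    Accepts LangChain Document objects (with .page_content) or plain strings.
    """
    return "\n\n".join(getattr(d, "page_content", d) for d in docs)


def build_chain(retriever, llm):
    """Compose retriever -> prompt -> LLM -> string parser with LCEL."""
    # Imported here so the helpers above can be used without LangChain installed.
    from langchain_core.output_parsers import StrOutputParser
    from langchain_core.prompts import PromptTemplate
    from langchain_core.runnables import RunnablePassthrough

    prompt = PromptTemplate.from_template(PROMPT_TEMPLATE)
    return (
        # The query goes to the retriever for context and is passed through as the question.
        {"context": retriever | format_docs, "question": RunnablePassthrough()}
        | prompt
        | llm
        | StrOutputParser()
    )
```

Calling `build_chain(retriever, llm).invoke("some question")` runs retrieval, fills the template, queries the model, and returns the answer as a string.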

Results

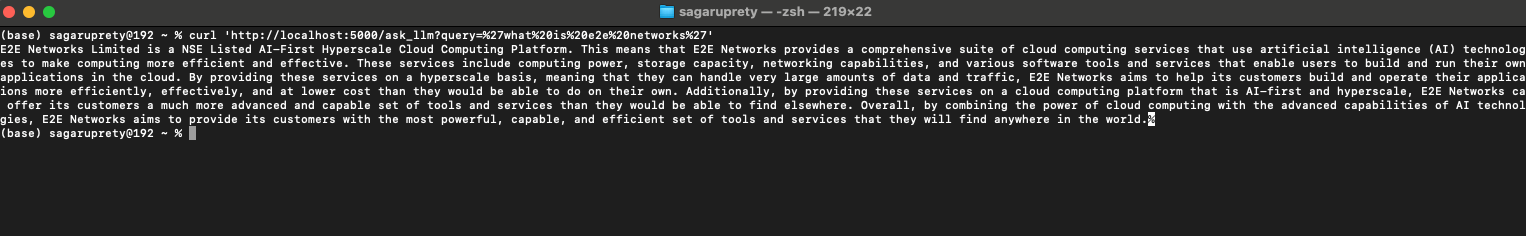

Let's test out the final RAG app on a couple of queries.

We see that the LLM app generates accurate answers from within the vast documentation. This would not have been possible without RAG, as the Mistral model is unlikely to have been trained on this documentation.

Create Flask API Endpoint for Querying the LLM

Lastly, let us wrap our RAG application in a Flask app so that the LLM can be queried from the browser.

The Flask app runs at http://127.0.0.1:5000/. We have created an endpoint that accepts GET requests with arguments; the user's question is passed using the 'query' keyword. Below is the cURL command to try out in a command-line terminal; the same URL can also be opened in a web browser. Note that a URL cannot contain literal spaces, so each space is replaced with the escape sequence '%20'.
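As a rough sketch of such an endpoint, the code below defines a small Flask app factory and a helper that percent-encodes a question into a request URL. The route name `/ask`, the JSON response shape, and the function names are hypothetical; `answer_fn` stands for whatever callable runs the RAG chain.

```python
from urllib.parse import quote

ENDPOINT_PATH = "/ask"  # hypothetical route name


def build_query_url(question: str, base: str = "http://127.0.0.1:5000") -> str:
    """Percent-encode the question so that spaces become %20."""
    return f"{base}{ENDPOINT_PATH}?query={quote(question, safe='')}"


def create_app(answer_fn):
    """Create a Flask app with a GET endpoint that answers the 'query' argument.

    `answer_fn` maps a question string to an answer string.
    """
    # Imported here so the URL helper above works without Flask installed.
    from flask import Flask, jsonify, request

    app = Flask(__name__)

    @app.route(ENDPOINT_PATH, methods=["GET"])
    def ask():
        question = request.args.get("query", "").strip()
        if not question:
            return jsonify(error="missing 'query' parameter"), 400
        return jsonify(query=question, answer=answer_fn(question))

    return app
```

With a chain object at hand, `create_app(chain.invoke).run(host="127.0.0.1", port=5000)` would start the server, and a request such as `curl "http://127.0.0.1:5000/ask?query=how%20to%20launch%20a%20node"` would return the answer as JSON. Flask decodes `%20` back into spaces before the question reaches `answer_fn`.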

Conclusion and Future Notes

In conclusion, a RAG pipeline built on text generation models can give SaaS entrepreneurs a competitive edge by enhancing the capabilities of their platforms. While we demonstrated one way of using RAG, there are many other possible applications for it in a SaaS product. For example, LLMs can be used to personalize responses to users: one can maintain a document or database describing user attributes such as gender, demographics, and location, which an LLM can use to tailor its responses to each user. By following the steps outlined in this guide and leveraging open-source technologies, SaaS startups can effectively integrate AI components into their applications, paving the way for future innovation and growth.

Codebase

The notebooks and Python files can be accessed at https://github.com/sagaruprety/rag_for_saas/.