Introduction

Bhashini is an AI-based translation tool designed to break down the barriers between the many languages spoken across India. It uses artificial intelligence and natural language processing (NLP), and, most importantly, it is crowdsourced, which helps developers gather the data needed to teach it different languages. It enables people to speak in their own language while talking to speakers of other languages.

Given India's linguistic diversity, language models built specifically for its many languages have become essential. Bhashini is designed to meet this need: it is built to understand, process, and produce text in a variety of Indian languages, including Bengali, Hindi, Tamil, Telugu, Marathi, Gujarati, Kannada, Malayalam, Punjabi, and others. It was created to bridge the gap between state-of-the-art language models and India's complex linguistic landscape. By using deep learning architectures tailored to the particular features of Indian languages, Bhashini offers contextual understanding, robust language processing, and adaptability to domains across many industries. Its multilingualism, contextual understanding, and domain adaptability are a significant step toward inclusivity, accessibility, and cultural relevance in Indian language processing. It also sets a new standard for language models customized to local linguistic subtleties, pointing toward a future in which technology works in harmony with India's linguistic diversity.

Understanding Bhashini

Bhashini, whose name comes from the Sanskrit word ‘bhasha’ (‘language’), is an advanced Indian language model designed to understand, produce, and handle text in different Indian languages.

Key Features

- Multilingual Capability: Bhashini supports many Indian languages, such as Hindi, Bengali, Tamil, Telugu, Marathi, Gujarati, Kannada, Malayalam, Punjabi, and others. This multilingual support is essential because it lets Bhashini serve users with a wide range of language preferences.

- Contextual Understanding: By utilizing sophisticated deep learning architectures, Bhashini is able to understand the subtleties of context in Indian languages, taking into account grammatical structures, idiomatic phrases, and linguistic nuances unique to each language.

- Fine-tuned for Indian Languages: Unlike general-purpose language models, Bhashini is tailored to the particular features of Indian languages, including their many scripts, phonetic variation, and language-specific syntactic rules.

- Domain Adaptability: The model can be adapted to specific domains, such as healthcare, finance, and education, so that it meets business needs and improves language processing in specialized fields.
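Supporting many languages starts with recognizing which script an input is written in. The Python sketch below is a generic illustration, not Bhashini's implementation: it counts the characters that fall into the Unicode blocks of nine major Indic scripts and returns the most frequent one. The function name `dominant_script` and the `SCRIPT_RANGES` table are our own; the code-point ranges are the basic Unicode blocks for those scripts.

```python
from collections import Counter
from typing import Optional

# Basic Unicode block ranges (inclusive) for some major Indic scripts.
# One script can serve several languages: Devanagari is used for Hindi,
# Marathi, Nepali and Sanskrit, and the Bengali block also covers Assamese.
# "Oriya" is the Unicode block name; the language is now usually spelled Odia.
SCRIPT_RANGES = {
    "Devanagari": (0x0900, 0x097F),
    "Bengali": (0x0980, 0x09FF),
    "Gurmukhi": (0x0A00, 0x0A7F),
    "Gujarati": (0x0A80, 0x0AFF),
    "Oriya": (0x0B00, 0x0B7F),
    "Tamil": (0x0B80, 0x0BFF),
    "Telugu": (0x0C00, 0x0C7F),
    "Kannada": (0x0C80, 0x0CFF),
    "Malayalam": (0x0D00, 0x0D7F),
}


def dominant_script(text: str) -> Optional[str]:
    """Return the Indic script with the most characters in `text`, or None."""
    counts = Counter()
    for ch in text:
        cp = ord(ch)
        for script, (lo, hi) in SCRIPT_RANGES.items():
            if lo <= cp <= hi:
                counts[script] += 1
                break  # each character belongs to at most one block
    if not counts:
        return None  # no Indic characters found (e.g. Latin-only input)
    return counts.most_common(1)[0][0]
```

Script is only a first signal: Hindi and Marathi, for example, are both written in Devanagari, so telling them apart requires a trained language-identification model.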

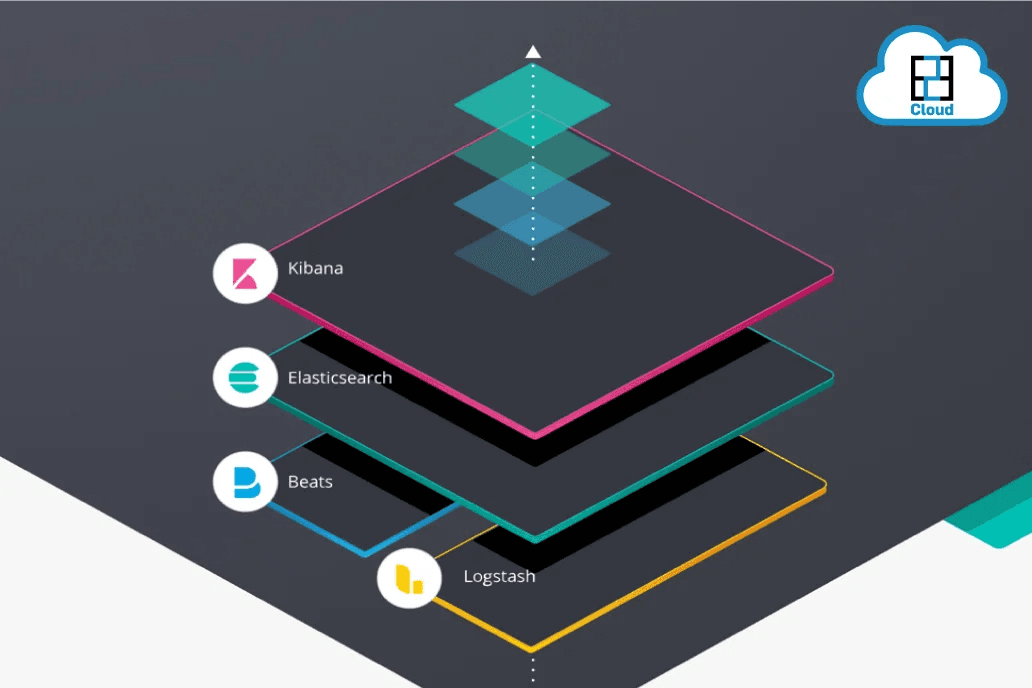

Technical Architecture

Bhashini's architecture is built on deep learning techniques adapted to the complexities of Indian languages. At its core, Bhashini uses transformer-based neural networks, drawing on well-known designs such as BERT, GPT, and XLNet. Unlike generic models, however, its design is tuned to the nuances of Indian languages, which helps it perform well across a variety of linguistic contexts. Much of the model's strength comes from its training data: a large corpus of varied text in several Indian languages, drawn from books, news articles, social media, and other digital platforms. Training on this dataset helps the model learn the subtleties of language usage, idiomatic phrases, grammatical structures, and contextual variation specific to each language.
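The paragraph above describes the architecture only as transformer-based. The operation that transformer layers such as those in BERT, GPT, and XLNet share is scaled dot-product attention, softmax(Q Kᵀ / √d_k) V: each token's new representation is a weighted average of value vectors, weighted by how well its query matches each key. The following is a minimal single-head sketch in plain Python for illustration only; it is not taken from Bhashini, and the function names are hypothetical.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    queries: list[list[float]],
    keys: list[list[float]],
    values: list[list[float]],
) -> list[list[float]]:
    """Compute softmax(Q K^T / sqrt(d_k)) V for a single attention head."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # attention weights sum to 1
        # Output is the attention-weighted average of the value vectors.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return outputs
```

Production implementations compute this with batched matrix multiplications and add learned projections for Q, K, and V, multiple heads, and masking; the loops here only make the arithmetic explicit.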

Bhashini uses specialized tokenization and preprocessing methods to handle the many scripts and character sets used by Indian languages. These methods segment the input text into tokens or subword units specific to each language, so that the model can process and represent it efficiently. Training is the stage in which the model adapts to the linguistic nuances of the various Indian languages, and it involves careful fine-tuning and optimization. Techniques such as domain-specific fine-tuning and transfer learning further improve Bhashini's performance and adaptability, making it applicable in specialized industries. Overall, the architecture combines state-of-the-art deep learning with customized data preprocessing and optimization to produce a model that can analyze and generate text effectively across India's heterogeneous linguistic environment. Its domain-specific optimizations and fine-tuning methods make it a flexible tool for language processing across the country's complex linguistic landscape.

Training Data

Bhashini's effectiveness is attributed to its training on large text corpora that span a variety of domains, sources, and genres across Indian languages. The corpus includes text from news stories, books, social media, and other digital sources, giving the model a broad and representative sample of how these languages are used.
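Corpora for Indian languages differ greatly in size, so a multilingual model trained on raw proportions sees low-resource languages rarely. A common remedy in multilingual pre-training is temperature-based sampling: a language with n_i training examples is sampled with probability proportional to n_i^α, for some α between 0 and 1. The source does not say whether Bhashini uses this technique; the sketch below, its function name, and the corpus sizes in the example are assumptions for illustration.

```python
def sampling_probabilities(corpus_sizes: dict[str, int], alpha: float = 0.5) -> dict[str, float]:
    """Return per-language sampling probabilities p_i proportional to n_i ** alpha.

    alpha = 1 samples in proportion to raw corpus size; alpha < 1 flattens
    the distribution so that smaller (low-resource) corpora are seen more often.
    """
    smoothed = {lang: n ** alpha for lang, n in corpus_sizes.items()}
    total = sum(smoothed.values())
    return {lang: s / total for lang, s in smoothed.items()}


# Hypothetical corpus sizes (number of documents) for Hindi and Punjabi.
probs = sampling_probabilities({"hi": 900, "pa": 100}, alpha=0.5)
```

With these hypothetical sizes, α = 1 leaves Punjabi's share at 0.1, while α = 0.5 raises it to 0.25 (√100 / (√900 + √100) = 10 / 40).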

Preprocessing and Tokenization

Because Indian languages are written in a wide variety of scripts and character sets, Bhashini uses specialized tokenization and preprocessing methods. These methods divide the input text into tokens or subword units specific to each language, which allows efficient processing and representation.
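A minimal illustration of subword tokenization is greedy longest-match segmentation, similar to the longest-match-first strategy of WordPiece (without its `##` continuation markers or `[UNK]` handling): at each position, take the longest vocabulary entry that matches. The sketch below assumes a small hand-written vocabulary; real tokenizers learn vocabularies of many thousands of units from the training corpus, and nothing here reflects Bhashini's actual tokenizer. Text is first normalized to Unicode NFC so that identical-looking strings share one code-point sequence.

```python
import unicodedata


def greedy_subword_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text into subword units by greedy longest match against `vocab`.

    Characters not covered by any vocabulary entry fall back to single code
    points, so the function never fails on unseen input.
    """
    # Normalize text and vocabulary the same way before matching.
    text = unicodedata.normalize("NFC", text)
    vocab = {unicodedata.normalize("NFC", v) for v in vocab}
    max_len = max((len(v) for v in vocab), default=1)

    tokens: list[str] = []
    for word in text.split():
        start = 0
        while start < len(word):
            # Try the longest candidate first and shrink until one matches;
            # a single code point is accepted even if it is not in vocab.
            end = min(len(word), start + max_len)
            while end > start + 1 and word[start:end] not in vocab:
                end -= 1
            tokens.append(word[start:end])
            start = end
    return tokens
```

With the vocabulary {"भारत", "ीय", "भाषा"}, the phrase "भारतीय भाषा" ("Indian language") splits into भारत + ीय + भाषा. The single-code-point fallback can separate a vowel sign (matra) from its consonant; falling back to grapheme clusters (aksharas) instead would avoid that.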

Fine-Tuning and Optimization

To improve its performance across languages and domains, the model undergoes careful fine-tuning and optimization. Techniques such as domain-specific fine-tuning and transfer learning make it more robust and adaptable.
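Transfer learning in its simplest form keeps a pre-trained encoder frozen and trains only a small task-specific head on its outputs (often called linear probing); full fine-tuning would also update the encoder's own weights. The source does not say which variant Bhashini uses. In the sketch below, the feature vectors stand in for embeddings from a hypothetical frozen encoder, and the two-class task (for example, sorting customer messages into healthcare and finance queries) is also hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_head(
    features: list[list[float]],
    labels: list[int],
    lr: float = 0.5,
    epochs: int = 200,
) -> list[float]:
    """Fit a logistic-regression head on frozen encoder outputs with SGD.

    `features` are never modified (the encoder stays frozen); only the head's
    weights are learned, with the bias stored as the last element.
    """
    dim = len(features[0])
    weights = [0.0] * (dim + 1)
    for _ in range(epochs):
        for x, y in zip(features, labels):
            # zip stops after `dim` items, so the bias is added separately.
            z = sum(w * xi for w, xi in zip(weights, x)) + weights[-1]
            err = sigmoid(z) - y  # gradient of the log loss with respect to z
            for i in range(dim):
                weights[i] -= lr * err * x[i]
            weights[-1] -= lr * err
    return weights


def predict(weights: list[float], x: list[float]) -> int:
    z = sum(w * xi for w, xi in zip(weights, x)) + weights[-1]
    return 1 if z > 0 else 0


# Hypothetical 2-D "embeddings": label 1 = healthcare query, 0 = finance query.
feats = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.8]]
labels = [1, 1, 0, 0]
head = train_head(feats, labels)
```

Training only the head is cheap and needs little labeled data, which suits specialized domains; updating the encoder as well usually gains accuracy at a much higher computational cost.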

Applications of Bhashini

The versatility of Bhashini extends to various practical applications:

- Language Understanding and Generation: Bhashini supports a range of NLP tasks in several Indian languages, making text understanding, sentiment analysis, translation, and text generation easier.

- Content Creation and Localization: Companies and content creators use Bhashini to create and localize content for different language regions while preserving linguistic accuracy and cultural relevance.

- Customer Interaction and Support: Companies can use Bhashini to improve customer service through chatbots and support systems that communicate with customers in their native languages.

- Education and Accessibility: By making information available in regional languages, Bhashini improves accessibility and diversity in learning contexts and broadens the educational resources available to learners.

Future Prospects and Challenges

While Bhashini stands as a remarkable advancement in Indian language processing, several challenges and opportunities lie ahead.

Challenges

- Data Quality and Quantity: The availability of high-quality and diverse training data across all Indian languages remains a challenge, impacting the model's performance and coverage.

- Resource Intensiveness: Training and maintaining language models like Bhashini require significant computational resources and expertise, posing a barrier to scalability.

Opportunities

- Enhanced Language Coverage: Continued efforts in expanding the coverage and quality of training data will further enhance Bhashini's capabilities across Indian languages.

- Research and Innovation: Ongoing research in NLP and deep learning will fuel innovations in language models, paving the way for improved versions of Bhashini with enhanced functionalities.

Conclusion

In conclusion, Bhashini is a major step toward recognizing and making use of the linguistic diversity that exists throughout India. Its ability to understand contextual subtleties, colloquial phrases, and domain-specific variation within each language could make it a vital tool for a wide range of applications, including customer service, education, and content development. It also helps democratize access to information and services in regional languages.
