A Note on Open-Source Models

Open-source LLMs have gained wide recognition for their accessibility and flexibility. They give users complete control over the source code, data, and deployment, and they are backed by active communities for support and development, which gives them an advantage over closed-source LLMs. This makes them well suited to a wide range of users, from researchers and educators to developers and businesses, each of whom can customize the models for their own domain.

Meta has been a significant contributor to the large language model (LLM) space, starting with the launch of Llama. This model marked Meta's entry into the competition with key players by demonstrating the potential of open-source pre-trained models. The subsequent release of Llama 2 further solidified Meta's position, triggering an explosion of open-source LLMs that were more capable at writing, coding, and problem-solving. The latest in this series is Llama 3, which continues Meta's commitment to democratizing powerful AI models.

Llama 3: An Overview

Llama 3 is Meta's latest contribution to the open-source LLM space. It shows considerable benchmark improvements over Llama 2 and over other open-source LLMs such as Gemma. Trained on a dataset seven times larger than Llama 2's, with four times more code, it performs considerably better on tasks such as question answering, coding, creative writing, extraction, inhabiting a character or persona, reasoning, rewriting, and summarization.

Image from Meta AI

Llama 3 is available in two sizes: Llama3-8B and Llama3-70B. The models were trained on 15 trillion tokens, and the context length was doubled from 4K to 8K for both. Both sizes also use grouped query attention. Llama 3 retains the decoder-only transformer design of earlier versions and uses a tokenizer with a vocabulary of 128,256 tokens.

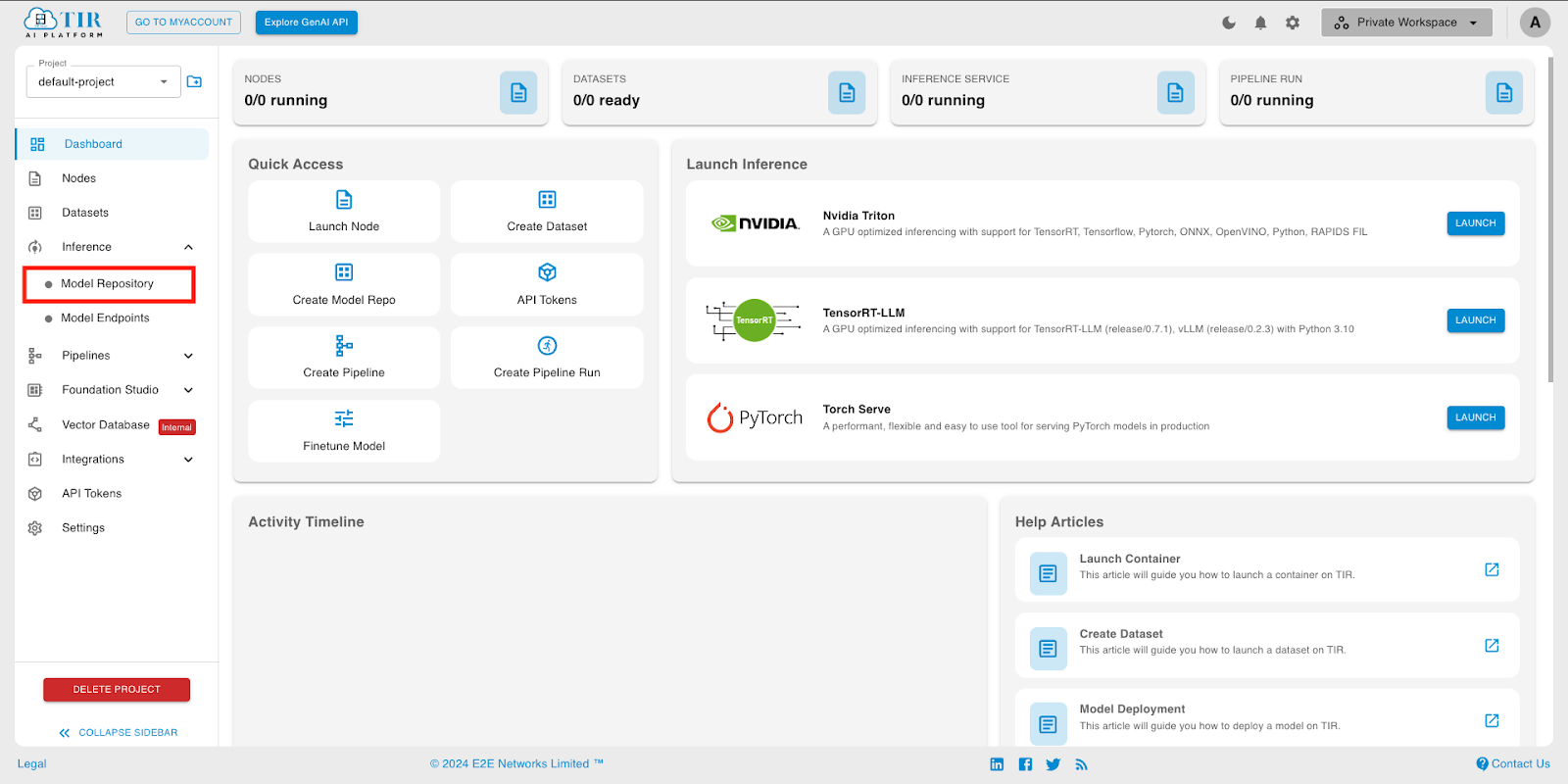

In this tutorial, we will explore fine-tuning, deployment, and inference with Llama 3 on the TIR AI Platform by E2E Networks.

Fine-Tuning, Deployment, and Inference with Llama 3 on the TIR AI Platform

Prerequisites

This tutorial uses the model and tooling from the Hugging Face ecosystem, and the model is fetched from the Hugging Face Hub. Launch a Jupyter Notebook on the E2E TIR AI Platform or connect to a node via SSH, then log in with your Hugging Face credentials.

!huggingface-cli login

Install the required libraries for fine-tuning.

%pip install -U bitsandbytes

%pip install -U transformers

%pip install -U peft

%pip install -U accelerate

%pip install -U trl

%pip install -U wandb

%pip install torch

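import os
import torch

# Use FlashAttention 2 with bfloat16 on GPUs with compute capability 8.0 or higher;
# otherwise fall back to eager attention with float16.
if torch.cuda.get_device_capability()[0] >= 8:
    !pip install -qqq flash-attn
    attn_implementation = "flash_attention_2"
    torch_dtype = torch.bfloat16
else:
    attn_implementation = "eager"
    torch_dtype = torch.float16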

Set up wandb and log in with your API key.

import wandb

# API_KEY is your Weights & Biases API key; define it before this call.
wandb.login(key=API_KEY)

Llama 3 is a gated model on Hugging Face. Make sure you have requested and received access to the model from its repo page before you try to use it.

Fine-Tuning

Let’s start fine-tuning the model for finance queries. Import the required libraries.

from transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig, HfArgumentParser, TrainingArguments, pipeline, logging

from peft import LoraConfig, PeftModel, prepare_model_for_kbit_training, get_peft_model

from datasets import load_dataset

from trl import SFTTrainer

Dataset

For this tutorial, I will be using the finance-alpaca dataset. Load the dataset from Hugging Face.

dataset_name = "gbharti/finance-alpaca"

dataset = load_dataset(dataset_name, split = "train")

Load the tokenizer from the model repo.

base_model = "meta-llama/Meta-Llama-3-8B"

tokenizer = AutoTokenizer.from_pretrained(base_model, trust_remote_code=True)

tokenizer.padding_side = 'right'

tokenizer.pad_token = tokenizer.eos_token

tokenizer.add_eos_token = True

Adding a prompt template helps with consistency, clarity, and control over the model's output. Here is one that we can use, along with a function that applies it to every example in the dataset.

prompt = """Below is an instruction that describes a finance-related task, paired with an input that provides further context. Write a response that appropriately completes the request.

### Instruction:
{}

### Input:
{}

### Response:
{}"""

EOS_TOKEN = tokenizer.eos_token  # Appended to every example so the model learns where to stop

def formatting_prompts_func(examples):
    instructions = examples["instruction"]
    inputs = examples["input"]
    outputs = examples["output"]
    texts = []
    for instruction, input_text, output in zip(instructions, inputs, outputs):
        # Must add EOS_TOKEN, otherwise your generation will go on forever!
        text = prompt.format(instruction, input_text, output) + EOS_TOKEN
        texts.append(text)
    return {"text": texts}

dataset = dataset.map(formatting_prompts_func, batched=True)

EOS_TOKEN is the end-of-sequence token used by the tokenizer. It is appended to each formatted string to mark the end of the text.
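To make the effect of the template concrete, here is a minimal, self-contained sketch that formats a single record in the same way. The sample record and the `<|end_of_text|>` string are assumptions for illustration (in the tutorial the EOS string comes from `tokenizer.eos_token`), and the `###` section markers are an assumption based on the sample response shown in the inference section.

```python
# Illustrative only: mirrors the prompt formatting without loading a tokenizer.
TEMPLATE = (
    "Below is an instruction that describes a finance-related task, "
    "paired with an input that provides further context. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{}\n\n### Input:\n{}\n\n### Response:\n{}"
)

# Assumption: stand-in for tokenizer.eos_token.
EOS = "<|end_of_text|>"


def format_example(instruction: str, context: str, response: str) -> str:
    """Fill the template for one record and terminate it with the EOS string."""
    return TEMPLATE.format(instruction, context, response) + EOS


# Hypothetical record in the instruction/input/output layout used by the dataset.
record = {
    "instruction": "What is an index fund?",
    "input": "",
    "output": "A fund that tracks a market index.",
}

formatted = format_example(record["instruction"], record["input"], record["output"])
print(formatted)
```

Printing one formatted example like this before training is a cheap way to check that the fields land in the right slots and that the text ends with the EOS string.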

Preparing Llama 3

I will be using PEFT (Parameter-Efficient Fine-Tuning) with LoRA (Low-Rank Adaptation) to fine-tune Llama 3. PEFT methods train only a small number of additional parameters while keeping the base model frozen. LoRA does this by learning small low-rank update matrices for selected layers, which reduces the computational requirements and memory footprint and makes fine-tuning more manageable.

Set up a BitsAndBytesConfig for 4-bit quantization and load the pre-trained model with this configuration.

bnb_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4",

bnb_4bit_compute_dtype=torch_dtype,

bnb_4bit_use_double_quant=True,

)

model = AutoModelForCausalLM.from_pretrained(

base_model,

quantization_config=bnb_config,

device_map="auto",

attn_implementation=attn_implementation

)

model.config.use_cache = False  # the KV cache is not needed for training and is incompatible with gradient checkpointing

model.config.pretraining_tp = 1

model.gradient_checkpointing_enable()

model = prepare_model_for_kbit_training(model)

peft_config = LoraConfig(

r=16,

lora_alpha=32,

lora_dropout=0.05,

bias="none",

task_type="CAUSAL_LM",

target_modules=['up_proj', 'down_proj', 'gate_proj', 'k_proj', 'q_proj', 'v_proj', 'o_proj']

)

model = get_peft_model(model, peft_config)

new_model = "Llama-3-Finance"

The code above defines a LoraConfig for Low-Rank Adaptation (LoRA) and applies it to the model with get_peft_model.
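To get a feel for how small the trainable part is, the following sketch counts the parameters that LoRA would add at rank 16 across the seven target modules. The layer dimensions are assumptions taken from the publicly documented Llama 3 8B configuration, not from this tutorial, and the count ignores everything except the LoRA matrices themselves.

```python
# Assumed Llama 3 8B dimensions (from the public model config, not from this tutorial).
HIDDEN = 4096         # hidden size
INTERMEDIATE = 14336  # MLP intermediate size
KV_DIM = 1024         # 8 key/value heads x 128 dims (grouped query attention)
NUM_LAYERS = 32

# (in_features, out_features) for each module listed in target_modules.
TARGET_SHAPES = {
    "q_proj": (HIDDEN, HIDDEN),
    "k_proj": (HIDDEN, KV_DIM),
    "v_proj": (HIDDEN, KV_DIM),
    "o_proj": (HIDDEN, HIDDEN),
    "gate_proj": (HIDDEN, INTERMEDIATE),
    "up_proj": (HIDDEN, INTERMEDIATE),
    "down_proj": (INTERMEDIATE, HIDDEN),
}


def lora_params(in_features: int, out_features: int, rank: int) -> int:
    """LoRA adds A (rank x in) and B (out x rank) next to each frozen weight."""
    return rank * (in_features + out_features)


def total_lora_params(rank: int) -> int:
    """Sum the LoRA parameters over all target modules and all layers."""
    per_layer = sum(lora_params(i, o, rank) for i, o in TARGET_SHAPES.values())
    return per_layer * NUM_LAYERS


trainable = total_lora_params(rank=16)
print(f"Trainable LoRA parameters at r=16: {trainable:,}")
```

Under these assumptions the adapters hold about 42 million parameters, roughly half a percent of the model's approximately 8 billion weights, which is why only a small set of gradients and optimizer states has to be kept in memory.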

Model Training

Train the model and save the weights. You can access the weights later for deployment.

training_arguments = TrainingArguments(

output_dir="./results",

num_train_epochs=10,

per_device_train_batch_size=4,

gradient_accumulation_steps=1,

optim="paged_adamw_32bit",

save_steps=25,

logging_steps=25,

learning_rate=2e-4,

weight_decay=0.001,

fp16=False,

bf16=False,

max_grad_norm=0.3,

max_steps=-1,

warmup_ratio=0.03,

group_by_length=True,

lr_scheduler_type="constant",

report_to="wandb"

)

trainer = SFTTrainer(

model=model,

train_dataset=dataset,

peft_config=peft_config,

max_seq_length = 2048,

dataset_text_field="text",

tokenizer=tokenizer,

args=training_arguments,

packing= False,

dataset_num_proc = 2,

)

trainer.train()

model = trainer.model.merge_and_unload()  # merge the LoRA weights into the base model
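The step that writes the weights to disk is not shown above. A minimal sketch, assuming the merged model and the tokenizer expose the standard Hugging Face `save_pretrained` method; the helper name `save_for_upload` and the output directory are hypothetical:

```python
from pathlib import Path


def save_for_upload(model, tokenizer, output_dir: str) -> Path:
    """Write model weights and tokenizer files into one directory.

    Both objects are expected to provide save_pretrained(path), as
    Hugging Face models and tokenizers do.
    """
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    model.save_pretrained(out)
    tokenizer.save_pretrained(out)
    return out


# In the notebook this might be called as (directory name taken from new_model):
# save_for_upload(model, tokenizer, "Llama-3-Finance")
```

Keeping the weights and the tokenizer files in one directory means a single folder can be uploaded to the model repository later.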

Creating Model Repository

To create the inference endpoint, we will need to move the model to a model repository on TIR.

- Go to TIR Dashboard.

- Choose a project.

- Go to Model Repository section.

- Click on Create Model.

- Enter a model name.

- Select Model Type as Custom for the custom container as we are deploying a fine-tuned model. Click on CREATE.

- You will now see details of the EOS (E2E Object Storage) bucket created for this model.

- EOS offers an API that is compatible with S3 for uploading and downloading data. In this tutorial, we'll be utilizing the MinIO CLI.

- Set up MinIO CLI using the commands on the following page.

- Use the commands to go to the folder containing the model snapshots. Then upload the model files that were saved after the training phase to the model repository.

If you cannot find the model files in the cache, try saving the fine-tuned LoRA adapter, reloading the base model, and merging the weights again.

Note: Use your own model repository and folder names. The names shown above are only examples.

You can see and confirm the file contents in the model repository after uploading.
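The upload step described above can be sketched with a small helper that builds the MinIO client command. This is only a sketch: the alias `tir-eos`, the bucket name, and the local directory are hypothetical placeholders, and the real values come from the setup commands shown for your model repository. It assumes the `mc` binary is installed and that an alias has already been configured.

```python
import shlex
import subprocess


def build_upload_command(local_dir: str, alias: str, bucket: str) -> list[str]:
    """Return an `mc cp --recursive` invocation that copies local_dir to the bucket."""
    target = f"{alias}/{bucket}/"
    return ["mc", "cp", "--recursive", local_dir, target]


def upload(local_dir: str, alias: str, bucket: str, dry_run: bool = True) -> str:
    """Print the command; run it only when dry_run is False."""
    command = build_upload_command(local_dir, alias, bucket)
    printable = shlex.join(command)
    print(printable)
    if not dry_run:
        # check=True raises CalledProcessError if mc exits with an error.
        subprocess.run(command, check=True)
    return printable


# Hypothetical names; replace them with the values from your model repository.
upload("Llama-3-Finance", alias="tir-eos", bucket="my-model-bucket")
```

The dry run is the default so that the command can be inspected before anything is copied; pass `dry_run=False` once the alias and bucket are confirmed.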

Deployment & Inference

Kudos! You are almost there. The model weights are now uploaded to the TIR Model Repository and are ready to be used in an endpoint that serves API requests.

- From the API tokens section, create an API token if you don't already have one. This will be used in your requests.

- Go to Model Endpoints section.

- Create a new Endpoint.

- Choose LLAMA-3-8B-IT model card.

- Choose Link with Model Repository and select the Model Repo from the dropdown.

- Set the required settings.

- Pick a suitable GPU plan. A V100 or better is recommended for Llama 3 inference.

- Add a custom endpoint name and set environment variables.

The endpoint will take a few minutes to be ready.

To test inference, find the Python and cURL requests in the API requests section of your endpoint and replace API_KEY with your API token.

cURL:

curl --location 'https://infer.e2enetworks.net/project/p-2945/endpoint/is-1396/v2/models/ensemble/generate' \
  --header 'Content-Type: application/json' \
  --header "Authorization: Bearer $API_KEY" \
  -X POST \
  --data '{
    "text_input": "What'\''s the difference between Term and Whole Life insurance?",
    "max_tokens": 1000
  }'

Python:

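import os
import json
import requests

url = "https://infer.e2enetworks.net/project/p-2945/endpoint/is-1396/v2/models/ensemble/generate"

payload = json.dumps({
    "text_input": "What's the difference between Term and Whole Life insurance?",
    "max_tokens": 1000
})

# A literal "$API_KEY" is not expanded inside a Python string, so read the token
# from the environment (this assumes API_KEY has been exported in your shell).
api_key = os.environ["API_KEY"]

headers = {
    'Content-Type': 'application/json',
    'Authorization': f'Bearer {api_key}'
}

response = requests.request("POST", url, headers=headers, data=payload)

print(response.text)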

Here is a sample response:

["<|begin_of_text|>Below is an instruction that describes a finance related task, paired with an input that provides further context. Write a response that appropriately completes the request.\n\n### Instruction:\nWhat's the difference between Term and Whole Life insurance?\n\n### Input:\n\n\n### Response:\nTerm life insurance is a type of life insurance that provides coverage for the life of the insured. Whole life insurance is a type of life insurance that provides coverage for the life of the insured. Term life insurance is a type of life insurance that provides coverage for the life of the insured. Whole life insurance is a type of"]
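The sample response echoes the full prompt before the generated answer. A small helper can strip the echo. This is a sketch that assumes the endpoint returns a JSON list containing one string, as in the sample above, and that the `### Response:` marker appears in it; the function name `extract_answer` is hypothetical.

```python
import json

RESPONSE_MARKER = "### Response:"


def extract_answer(raw_body: str) -> str:
    """Return the text after the last response marker in the first generated string."""
    generations = json.loads(raw_body)
    text = generations[0]
    # If the marker is missing, fall back to the full text instead of failing.
    _, found, answer = text.rpartition(RESPONSE_MARKER)
    return answer.strip() if found else text.strip()


# Shortened, hypothetical body in the same shape as the sample response.
sample_body = json.dumps([
    "<|begin_of_text|>Below is an instruction...\n\n### Instruction:\n"
    "What is a bond?\n\n### Input:\n\n\n### Response:\nA bond is a loan to an issuer."
])

print(extract_answer(sample_body))
```

With the request code above, the helper would be called as `extract_answer(response.text)`.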

Wrapping Up

You have learned how to fine-tune and deploy Llama 3 on the TIR AI platform by E2E Networks. I recommend exploring other model containers, such as vision models, to see how the platform handles model deployment and inference. The pipelines and foundation studio sections offer further insight into no-code training and fine-tuning of generative AI models. I hope this tutorial helped you better understand the steps involved in AI model deployment and inference.

References

https://ai.meta.com/blog/meta-llama-3/