This article introduces Falcon-40B and gives a step-by-step guide to deploying it on E2E Cloud.

The AI industry initially focused on small-scale applications and pipelines that were less computationally complex and less time-intensive. Natural Language Processing (NLP) is a branch of AI that combines computer science and linguistics. Recent advances in computing power have allowed the AI community to train models at scale, including large language models (LLMs) trained on very large text corpora. As the amount of openly available data grows, LLMs make it easier to access, summarize, and work with that information.

What is Falcon 40B?

Falcon-40B is an open-source LLM released by the Technology Innovation Institute (TII) in the UAE. At its release it ranked first on Hugging Face's Open LLM Leaderboard, which also made it the top-ranked royalty-free LLM at the time. It has 40 billion parameters and was trained on 1 trillion tokens; the "40B" in its name refers to the parameter count. A smaller sibling, Falcon-7B, is also available.

The Leaderboard (Source)

Challenges Falcon-40B Solves

TII developed Falcon-40B to address several challenges, including:

- The need for an open-source large language model.

- The need for a versatile model that works across languages. Its training data is mostly English, with German, Spanish, and French, plus smaller amounts of several other European languages.

Falcon-40B is released under the Apache 2.0 license. It is therefore openly available for research and academic work as well as for commercial use, which makes it a valuable contribution to the academic community.

Capabilities

Its capabilities include, but are not limited to, the following:

- Natural Language Generation

Falcon-40B can generate a wide range of contextually appropriate content, such as blog articles and persuasive cold emails. It can also translate text.

- Natural Language Understanding

With its robust language-processing capabilities, Falcon-40B takes context into account. It can identify entities and the relationships between them and draw relevant insights from the input.

- Code Generation

Falcon-40B can help developers by generating code snippets for specific tasks, saving time and effort during development.

- Data Analysis

Falcon-40B's language-modeling abilities also let it extract useful insights from complex text. It can help users identify patterns, trends, and correlations in the information it is given.

- Machine Learning

Falcon-40B is also well suited to machine learning work. Researchers and practitioners can fine-tune it on their own datasets and use it as a base for further experimentation.

Latest Developments

Because it is royalty-free, Falcon-40B has been adopted in both the public and private sectors, most notably by the UAE Government. Researchers have also experimented with the model on cloud platforms such as E2E Cloud to explore its capabilities and potential applications.

Model and Architecture

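Falcon-40B is a causal, decoder-only model whose architecture is broadly adapted from GPT-3. The main differences are:

- Attention: FlashAttention, combined with multi-query attention (key and value projections shared across attention heads)

- Positional embeddings: rotary positional embeddings (RoPE)

- Decoder blocks: attention and the MLP computed in parallel, with two layer norms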

The FlashAttention approach

FlashAttention is faster and more memory-efficient than standard attention. It is also exact: it computes the same result, with no approximation. Below is the architecture of FlashAttention:

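FlashAttention gets its speed by keeping the working data in the GPU's on-chip SRAM (Static Random-Access Memory). SRAM is much faster but much smaller than the GPU's main HBM (High-Bandwidth Memory). Because SRAM is small, the attention computation is split into blocks that fit in it, a technique called tiling, so the full attention matrix never has to be written to HBM. This cuts memory use and memory traffic, which speeds up training, while producing exactly the same output as standard attention.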
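The tiling idea can be illustrated with a short NumPy sketch of the online-softmax trick that FlashAttention relies on. It is not the actual fused CUDA kernel, and NumPy gives no control over SRAM. It only shows that (non-causal, for simplicity) attention can be computed one key/value block at a time. For each query it keeps a running maximum and a running sum, and it never builds the full N x N score matrix, yet it still matches ordinary attention. The function names and `block_size` are illustrative assumptions.

```python
import numpy as np


def naive_attention(q, k, v):
    """Standard attention: builds the full (N x N) score matrix at once."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def tiled_attention(q, k, v, block_size=16):
    """Same result, but K and V are visited one block at a time.

    Per query row we keep only a running max (m), a running softmax
    denominator (l) and a running unnormalised output (acc).
    """
    n, d = q.shape
    scale = 1.0 / np.sqrt(d)
    m = np.full(n, -np.inf)
    l = np.zeros(n)
    acc = np.zeros((n, v.shape[-1]))
    for start in range(0, k.shape[0], block_size):
        k_blk = k[start:start + block_size]
        v_blk = v[start:start + block_size]
        s = q @ k_blk.T * scale                # scores for this block only
        m_new = np.maximum(m, s.max(axis=-1))  # updated running max
        p = np.exp(s - m_new[:, None])         # block weights relative to m_new
        correction = np.exp(m - m_new)         # rescales what was accumulated so far
        l = l * correction + p.sum(axis=-1)
        acc = acc * correction[:, None] + p @ v_blk
        m = m_new
    return acc / l[:, None]


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((64, 32)) for _ in range(3))
print(np.allclose(naive_attention(q, k, v), tiled_attention(q, k, v)))  # True
```

Each new block rescales the earlier partial sums by `exp(m - m_new)` instead of recomputing them. This is why the blockwise result is exact rather than approximate.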

Positional Embeddings

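Self-attention on its own ignores token order, so transformers add positional information to the token representations. Falcon-40B uses rotary positional embeddings (RoPE), which rotate the query and key vectors by angles that depend on each token's position. As a result, the attention score between two tokens depends on their relative distance, which helps the model capture dependencies between tokens that are far apart.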
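Here is a minimal NumPy sketch of rotary positional embeddings. It assumes the interleaved-pair convention from the RoFormer paper; Falcon's own implementation may pair dimensions differently, for example by splitting the vector into halves. Each pair of dimensions is rotated by an angle proportional to the token position. The check at the end shows that the query-key dot product depends only on the distance between positions. `rotary_embed` and `base=10000` are assumptions for illustration.

```python
import numpy as np


def rotary_embed(x, position, base=10000.0):
    """Rotate consecutive pairs (x[0], x[1]), (x[2], x[3]), ... of vector x.

    Pair i is rotated by the angle position * base ** (-2i / d), so low
    dimensions rotate quickly with position and high dimensions slowly.
    """
    d = x.shape[-1]
    if d % 2:
        raise ValueError("rotary embeddings need an even dimension")
    inv_freq = base ** (-np.arange(0, d, 2) / d)  # one frequency per pair
    angle = position * inv_freq
    cos, sin = np.cos(angle), np.sin(angle)
    x_even, x_odd = x[0::2], x[1::2]
    out = np.empty_like(x)
    out[0::2] = x_even * cos - x_odd * sin  # standard 2-D rotation
    out[1::2] = x_even * sin + x_odd * cos
    return out


rng = np.random.default_rng(0)
q, k = rng.standard_normal(64), rng.standard_normal(64)

# Same relative distance (3 positions) at different absolute positions
s_near = rotary_embed(q, 5) @ rotary_embed(k, 2)
s_far = rotary_embed(q, 103) @ rotary_embed(k, 100)
print(np.isclose(s_near, s_far))  # True
```

Rotations preserve vector length, so RoPE changes only the angle between queries and keys, not their magnitudes.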

Decoder Blocks

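Falcon-40B is a decoder-only transformer: a stack of decoder blocks, each combining causal self-attention (every token attends only to itself and earlier tokens) with an MLP (feed-forward network). In Falcon's blocks, the attention and MLP branches run in parallel on layer-normed copies of the same input, and their outputs are added to the residual stream. The model generates text one token at a time, with each new token conditioned on all the previous ones.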
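The parallel block layout can be sketched in NumPy as follows. This is a toy built on stated assumptions, not TII's implementation. It uses tiny random weights, a GELU MLP four times wider than the model, and a single key/value head shared by all query heads (multi-query attention). All function and parameter names are hypothetical. The final check confirms the causal mask: changing the last token leaves the outputs at earlier positions unchanged.

```python
import numpy as np


def layer_norm(x, gamma, beta, eps=1e-5):
    """Normalise each token vector, then apply a learned scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def gelu(x):
    """tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x**3)))


def causal_multiquery_attention(x, p, n_heads):
    """Causal self-attention where every query head shares one key/value head."""
    t, d = x.shape
    head_dim = d // n_heads
    q = (x @ p["w_q"]).reshape(t, n_heads, head_dim)  # (T, H, hd)
    k = x @ p["w_k"]                                    # (T, hd), shared by all heads
    v = x @ p["w_v"]                                    # (T, hd), shared by all heads
    scores = np.einsum("thd,sd->hts", q, k) / np.sqrt(head_dim)  # (H, T, T)
    future = np.triu(np.ones((t, t)), k=1).astype(bool)  # True where key comes after query
    scores = np.where(future, -np.inf, scores)           # causal mask
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)                             # masked entries become exactly 0
    weights /= weights.sum(axis=-1, keepdims=True)
    out = np.einsum("hts,sd->thd", weights, v).reshape(t, d)
    return out @ p["w_o"]


def parallel_decoder_block(x, p, n_heads):
    """x + Attention(LN_attn(x)) + MLP(LN_mlp(x)): both branches read the same input."""
    attn = causal_multiquery_attention(layer_norm(x, *p["ln_attn"]), p, n_heads)
    mlp = gelu(layer_norm(x, *p["ln_mlp"]) @ p["w_up"]) @ p["w_down"]
    return x + attn + mlp


def init_params(d, n_heads, rng, scale=0.02):
    """Hypothetical toy initialisation with small random weights."""
    head_dim = d // n_heads
    return {
        "ln_attn": (np.ones(d), np.zeros(d)),
        "ln_mlp": (np.ones(d), np.zeros(d)),
        "w_q": rng.standard_normal((d, d)) * scale,
        "w_k": rng.standard_normal((d, head_dim)) * scale,
        "w_v": rng.standard_normal((d, head_dim)) * scale,
        "w_o": rng.standard_normal((d, d)) * scale,
        "w_up": rng.standard_normal((d, 4 * d)) * scale,  # 4x wider MLP (assumption)
        "w_down": rng.standard_normal((4 * d, d)) * scale,
    }


rng = np.random.default_rng(0)
params = init_params(d=32, n_heads=4, rng=rng)
x = rng.standard_normal((6, 32))            # 6 tokens, model width 32
y = parallel_decoder_block(x, params, n_heads=4)

x_changed = x.copy()
x_changed[-1] = rng.standard_normal(32)     # replace only the last token
y_changed = parallel_decoder_block(x_changed, params, n_heads=4)
print(y.shape)                              # (6, 32)
print(np.allclose(y[:-1], y_changed[:-1]))  # True: earlier tokens never see later ones
```

Because both branches read the same input, the attention and MLP matrix multiplications can run concurrently. This is the motivation usually given for the parallel layout.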

Block Structure of Falcon 40-B

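The above diagram shows the block structure of Falcon-40B. The inputs to attention are the queries (Q), keys (K), and values (V), all projected from the token representations. Q is multiplied by the transpose of K and the result is scaled. A causal mask then hides future tokens, a softmax turns the scores into weights, and the weights are multiplied by V. A final linear layer projects the result. With FlashAttention, this is computed block by block, which speeds up training and saves memory. In bfloat16, the 40 billion weights alone take about 80 GB, so running the model on a single 80 GB A100 generally requires 8-bit or 4-bit quantization. TII's model card recommends about 85-100 GB of GPU memory for fast inference.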
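Whether Falcon-40B fits on one GPU comes down to simple arithmetic. This sketch estimates the memory taken by the weights alone at different precisions. It assumes a nominal 40e9 parameters and 1 GB = 1e9 bytes. Activations and the attention key/value cache come on top of this, so the real requirement is higher.

```python
def weight_memory_gb(n_params, bytes_per_param):
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 40e9  # nominal parameter count behind the "40B" in the name (assumption)

# Bytes per parameter for common storage precisions
precisions = {"float32": 4, "bfloat16": 2, "int8": 1, "4-bit": 0.5}

# Prints 160, 80, 40 and 20 GB respectively
for name, nbytes in precisions.items():
    print(f"{name:>8}: {weight_memory_gb(N_PARAMS, nbytes):.0f} GB")
```

In bfloat16, the weights by themselves already fill an 80 GB A100. Quantizing to 8-bit or 4-bit, for example with the bitsandbytes package installed later in this guide, is therefore the usual route to a single-GPU setup.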

Why is Falcon 40-B so powerful?

Falcon-40B was trained on a very large text corpus. Most of its training data comes from RefinedWeb, a filtered and deduplicated web dataset built from CommonCrawl, which has been collecting petabytes of web data since 2008. The rest comes from curated sources such as books, code, technical papers, and conversations from sites like Reddit. The scale and quality of this data are major contributors to Falcon-40B's strength.


Comparison of Falcon-40B with other LLMs

Launching Falcon-40B on E2E Cloud

To successfully launch Falcon-40B on the E2E Cloud platform, follow the step-by-step guide below.

- Generate an SSH key pair on your local machine with the following command:

$ ssh-keygen

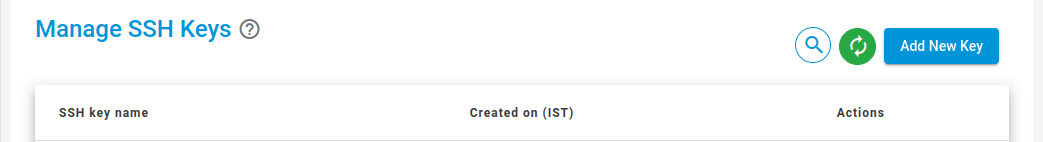

This generates a public key and a private key on your local machine. Never share your private key with anyone. Add the public key (the .pub file) to E2E Cloud under Settings > SSH Keys > Add New Key.

- After adding the key, log in to E2E Cloud, launch a GPU node, and connect to it from your local machine via SSH:

$ ssh username@ip_address

Enter your key passphrase (or password) when prompted.

It is good practice to update the package index and upgrade the installed packages first:

$ sudo apt update && sudo apt -y upgrade

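Install Git LFS (Large File Storage), which is needed to download the large model files, and enable it:

$ sudo apt -y install git-lfs

$ git lfs install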

Then clone the Falcon-40B repository from Hugging Face. The weights alone are roughly 80 GB, so make sure the node has enough disk space:

$ git clone https://huggingface.co/tiiuae/falcon-40b

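Optionally, download the RefinedWeb dataset. It is only needed if you plan to train or fine-tune, and it is very large (hundreds of gigabytes):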

$ git clone https://huggingface.co/datasets/tiiuae/falcon-refinedweb

Install the necessary packages:

$ sudo apt -y install -qq aria2

$ pip install -q -U torch torchvision torchaudio torchtext torchdata --extra-index-url https://download.pytorch.org/

$ pip install -q -U bitsandbytes sentencepiece fsspec gradio einops xformers

$ pip install -q -U git+https://github.com/huggingface/transformers.git

$ pip install -q -U git+https://github.com/huggingface/accelerate.git

To get started with the model, create a Python script, say “script.py”:

$ touch script.py

Edit “script.py” and add the following code. It loads Falcon-40B and generates text from a prompt (this is inference, not training):

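from transformers import AutoTokenizer
import transformers
import torch

# Hugging Face Hub ID; a local path such as the clone ./falcon-40b also works
model = "tiiuae/falcon-40b"

tokenizer = AutoTokenizer.from_pretrained(model)

# bfloat16 halves memory versus float32; device_map="auto" spreads the
# layers across the available GPUs (offloading to CPU if needed)
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    torch_dtype=torch.bfloat16,
    trust_remote_code=True,
    device_map="auto",
)

# Adjacent string literals are joined, so the prompt can span several lines
sequences = pipeline(
    "Girafatron is obsessed with giraffes, the most glorious animal on the face of this Earth. "
    "Girafatron believes all other animals are irrelevant when compared to the glorious majesty "
    "of the giraffe.\nDaniel: Hello, Girafatron!\nGirafatron:",
    max_length=200,          # total length in tokens, prompt included
    do_sample=True,          # sample instead of greedy decoding
    top_k=10,                # sample only from the 10 most likely next tokens
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")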

Run:

$ python script.py

On the first run, this downloads the model weights. It then loads them and prints the generated text.
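The prompt in the script follows a simple dialogue format. It starts with a persona description, continues with `Speaker: text` turns, and ends with the bot's name and a colon so that the model continues speaking as the bot. By default, the text-generation pipeline returns the prompt plus the continuation in `generated_text`, and a sampled reply may run on into invented further turns. The two helpers below are hypothetical conveniences, not part of transformers, for building such prompts and trimming the output. The generated string in the example is made up, not real model output.

```python
def build_dialogue_prompt(persona, turns, bot_name):
    """Hypothetical helper: persona text, then 'Speaker: text' lines,
    ending with '<bot_name>:' so the model continues as the bot."""
    lines = [persona] + [f"{speaker}: {text}" for speaker, text in turns]
    lines.append(f"{bot_name}:")
    return "\n".join(lines)


def extract_reply(generated_text, prompt, user_name):
    """Hypothetical helper: drop the echoed prompt, then cut the reply
    at the next turn the model invents for the user."""
    if generated_text.startswith(prompt):
        generated_text = generated_text[len(prompt):]
    return generated_text.split(f"\n{user_name}:")[0].strip()


persona = (
    "Girafatron is obsessed with giraffes, the most glorious animal on the face of this Earth. "
    "Girafatron believes all other animals are irrelevant when compared to the glorious majesty "
    "of the giraffe."
)
prompt = build_dialogue_prompt(persona, [("Daniel", "Hello, Girafatron!")], "Girafatron")

# Made-up example output, standing in for seq["generated_text"]
fake_output = prompt + " Hello Daniel! Have you seen a giraffe today?\nDaniel: Not yet."
print(extract_reply(fake_output, prompt, "Daniel"))  # Hello Daniel! Have you seen a giraffe today?
```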

Conclusion

Falcon-40B has opened up new opportunities for academics and researchers to build on an openly licensed model and to work with web-scale data in their own projects. TII's free release of the model and a large extract of its training dataset is an admirable example of open-source community action. As researchers, we are excited about the possibilities this model may offer in the near future. In addition, the user-friendly E2E Networks cloud platform offers an affordable option for training large-scale models, and we look forward to training LLMs at scale on our own data using E2E Cloud infrastructure.