Introduction

E-commerce businesses increasingly adopt chatbots to improve customer service and engagement, especially in sectors where understanding customer preferences and buying behaviors is essential.

Thanks to advancements in AI, these chatbots can now offer personalized, context-based responses, enhancing the overall shopping experience. Another major development in this field is the emergence of advanced text-to-speech (TTS) systems like Parler TTS, which allow chatbots to respond in natural, human-like voices, bringing a more personal feel to automated interactions.

In this article, we will guide you through the process of building an AI-powered e-commerce voice chatbot that responds to user queries in a human-like voice. You can use the pattern explained below to add voice capabilities to your own customer query responses.

About Parler TTS - Text-to-Speech Model

Parler TTS is a text-to-speech system that can generate high-quality, natural-sounding speech from text input using advanced neural network models. It supports multiple languages and voices, making it perfect for applications like virtual assistants and interactive voice systems.

Integrating Parler TTS into a chatbot enhances user engagement by providing human-like voice responses, making interactions more natural and accessible, especially for users who prefer listening or are unable to read text. This is particularly useful in customer service applications where clear verbal communication is crucial, or when users are busy and cannot read responses. In addition, the voice is controlled through a plain-text description of the speaker, which allows for a more personalized user experience.

How to Use Parler TTS

Before we get started, here is how you can use the Parler TTS model.

First, import the libraries.

import torch

from parler_tts import ParlerTTSForConditionalGeneration

from transformers import AutoTokenizer

import soundfile as sf

Then set the device as GPU if available.

device = "cuda:0" if torch.cuda.is_available() else "cpu"

Then initialize the model and tokenizer.

tts_model = ParlerTTSForConditionalGeneration.from_pretrained("parler-tts/parler-tts-mini-v1").to(device)

tokenizer = AutoTokenizer.from_pretrained("parler-tts/parler-tts-mini-v1")

Give the prompt (input text) and description of the speaker to generate the output.

prompt = "In the high-stakes world of tech interviews, candidates often feel like they're the ones being scrutinized, their every word, project, and decision analyzed to the nth degree. But here's a truth that often goes unspoken: companies hold most of the power in these scenarios, and they can obfuscate their culture during interviews, either intentionally or unintentionally. It’s easy for a company to present a polished front, but as a candidate, you need to dig deeper to uncover what working there is really like."

description = "A male speaker, with the speaker's voice sounding clear and very close up."

You can now generate the audio and save it to disk.

input_ids = tokenizer(description, return_tensors="pt").input_ids.to(device)

prompt_input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

generation = tts_model.generate(input_ids=input_ids, prompt_input_ids=prompt_input_ids)

audio_arr = generation.cpu().numpy().squeeze()

sf.write("parler_tts_out.wav", audio_arr, tts_model.config.sampling_rate)

If you are working in IPython or a Jupyter notebook, you can also play the output inline.

from IPython.display import Audio

Audio("parler_tts_out.wav")
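Before wiring the model into a larger application, it can help to sanity-check the saved file. The sketch below uses only Python's standard `wave` module to report a WAV file's sample rate and duration. It assumes the file is 16-bit PCM; `write_test_tone` and `wav_info` are hypothetical helper names, and the synthetic tone merely stands in for real TTS output so the example runs without the model.

```python
import math
import os
import struct
import tempfile
import wave


def write_test_tone(path, seconds=1.0, sample_rate=16000, freq=440.0):
    """Write a mono 16-bit PCM sine tone; a stand-in for real TTS output."""
    n_frames = int(seconds * sample_rate)
    frames = bytearray()
    for n in range(n_frames):
        sample = int(0.3 * 32767 * math.sin(2 * math.pi * freq * n / sample_rate))
        frames += struct.pack("<h", sample)
    with wave.open(path, "wb") as wf:
        wf.setnchannels(1)
        wf.setsampwidth(2)  # 2 bytes per sample = 16-bit audio
        wf.setframerate(sample_rate)
        wf.writeframes(bytes(frames))


def wav_info(path):
    """Return (sample_rate, duration_in_seconds) for a PCM WAV file."""
    with wave.open(path, "rb") as wf:
        rate = wf.getframerate()
        return rate, wf.getnframes() / rate


# Demo on a temporary file; point wav_info at your own output file instead.
demo_path = os.path.join(tempfile.mkdtemp(), "tone.wav")
write_test_tone(demo_path, seconds=0.5, sample_rate=8000)
print(wav_info(demo_path))
```

A duration close to zero, or far shorter than the text would take to read aloud, is a quick signal that generation did not go as expected.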

Integrating Parler TTS to Generate Voice Responses from Customer Data

We will now show how to use the TTS model to create voice responses from customer data.

To build this, we will use several technologies in addition to the Parler TTS model described above. Here is a short description of each.

Llama 3.1 LLM: Llama 3.1, developed by Meta, is available in three sizes: 8 billion, 70 billion, and 405 billion parameters. It has a context length of 128,000 tokens and supports eight languages. It includes tool-use capabilities and handles tasks like long-form summarization and coding assistance. It uses Grouped-Query Attention (GQA) for more efficient inference. Meta evaluated Llama 3.1 on over 150 benchmark datasets and reports that it is competitive with closed models such as GPT-4 variants. We will use the Llama 3.1-70B model in our tutorial.

Vector Embeddings Generation: Vector embeddings are numerical representations of unstructured data. To generate them, embedding models are used, which convert textual unstructured data into a numerical vector format while capturing the features of the data. The resulting embeddings are dense, high-dimensional vectors that capture the semantic meaning and contextual relationships of the original data.

Ollama: Ollama is a lightweight and extensible framework that simplifies running large language models (LLMs) on your own machine, including a cloud GPU server. It provides a simple API for creating, running, and managing language models, as well as a library of pre-built models that can be easily integrated into applications. Ollama supports a range of models, including popular ones like Llama 3.1, Llama 2, Mistral, Dolphin Phi, Neural Chat, and more.

In addition to the above, we will use a vector database (Qdrant) to store and search through vector embeddings. We will also use LangChain to stitch the whole workflow together.
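To make "searching through vector embeddings" concrete, here is a minimal sketch of cosine similarity, the distance measure this tutorial configures in Qdrant. The three-dimensional vectors are made up for illustration (real embeddings have hundreds of dimensions), and `cosine_similarity` and `rank_by_similarity` are hypothetical helper names. A vector database does the same comparison at scale, using indexes instead of a full scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_similarity(query, vectors):
    """Return indices of `vectors`, most similar to `query` first."""
    scores = [cosine_similarity(query, v) for v in vectors]
    return sorted(range(len(vectors)), key=lambda i: scores[i], reverse=True)


# Toy "embeddings" (made up for illustration).
stored = [[1.0, 0.0, 0.0], [0.7, 0.7, 0.0], [0.0, 0.0, 1.0]]
query = [0.9, 0.1, 0.0]
print(rank_by_similarity(query, stored))  # [0, 1, 2]
```

The query points mostly along the first axis, so the first stored vector ranks highest and the orthogonal third vector ranks last.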

Prerequisites

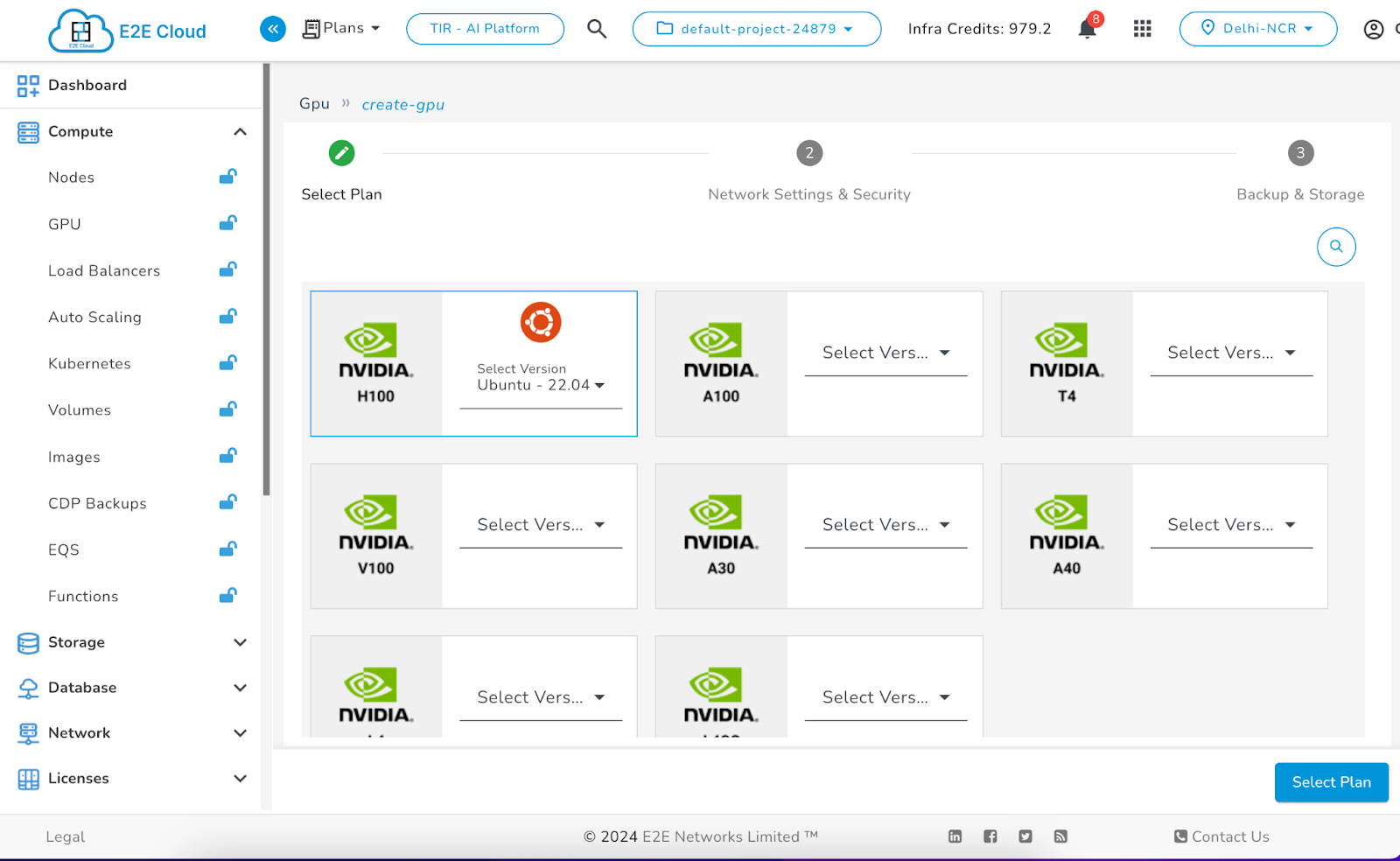

Once you have launched your cloud GPU node, you can then SSH into the machine, and install and launch Jupyter Lab.

Alternatively, you can also switch to TIR - AI Development Platform on Myaccount, and launch a Jupyter Notebook there. You will get a choice to pick the GPU node, and you can pick accordingly. This is the recommended approach.

We will assume that you have a Jupyter Notebook running either on a cloud GPU node or via TIR.

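Before running the code, let’s install the required libraries. Parler TTS is installed from its GitHub repository.

! pip install sentence-transformers
! pip install qdrant_client langchain-community
! pip install gradio
! pip install pandas soundfile ollama
! pip install git+https://github.com/huggingface/parler-tts.git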

Step 1: Loading and Processing Customer Data

We start by loading customer data from a CSV file and processing it to create separate customer profiles that will later be used to generate responses. The code for processing may vary according to the data provided.

import pandas as pd

file_path = 'data.csv'
df = pd.read_csv(file_path, encoding='ISO-8859-1')

def create_profile(customer_name, df):
    # All rows (transactions) that belong to this customer
    customer_data = df[df['CustomerName'] == customer_name]
    customer_id = customer_data.iloc[0]['CustomerID']
    # One sentence per transaction
    transactions = [
        f"On {row['InvoiceDate']}, {customer_name} bought {row['Quantity']} {row['Description']} for price: {row['UnitPrice']} (Stock Code: {row['StockCode']}, InvoiceNo: {row['InvoiceNo']}) in {row['Country']}"
        for _, row in customer_data.iterrows()
    ]
    history_text = "\n".join(transactions)
    # Return the full profile (name and ID header plus history), not just the history
    profile = f"Name={customer_name}, Customer Id={customer_id}\n" + history_text
    return profile

def create_all_profiles(df):
    # One text profile ("chunk") per unique customer
    return [create_profile(name, df) for name in df['CustomerName'].unique()]

chunks = create_all_profiles(df)
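To show what this step expects and produces, here is a small self-contained sketch using only the standard library. The column names are the ones the code above reads; the rows, IDs, and the `build_profiles` helper are made up for illustration and are not part of the tutorial's dataset.

```python
import csv
import io
from collections import defaultdict

# Made-up sample rows using the column names the tutorial code reads.
SAMPLE_CSV = """InvoiceNo,StockCode,Description,Quantity,InvoiceDate,UnitPrice,CustomerID,Country,CustomerName
A1001,SKU-01,Ceramic Mug,2,2024-01-05,4.50,C001,India,Dan Daniels
A1002,SKU-02,Desk Lamp,1,2024-02-11,19.99,C001,India,Dan Daniels
A1003,SKU-03,Notebook,5,2024-02-12,1.25,C002,India,Asha Rao
"""


def build_profiles(csv_text):
    """Group rows by customer and join them into one text profile each."""
    rows_by_customer = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        rows_by_customer[row["CustomerName"]].append(row)

    profiles = {}
    for name, rows in rows_by_customer.items():
        header = f"Name={name}, Customer Id={rows[0]['CustomerID']}"
        lines = [
            f"On {r['InvoiceDate']}, {name} bought {r['Quantity']} {r['Description']} "
            f"for price: {r['UnitPrice']} in {r['Country']}"
            for r in rows
        ]
        profiles[name] = "\n".join([header] + lines)
    return profiles


profiles = build_profiles(SAMPLE_CSV)
print(profiles["Dan Daniels"])
```

Each customer ends up with a single block of text: a header line followed by one line per purchase. That block is the unit that gets embedded and retrieved in the following steps.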

Step 2: Encoding the Chunks Using a Pre-Trained Embedding Model

You can use a pre-trained model such as sentence-transformers/all-mpnet-base-v2 to turn the chunks into embeddings with the sentence-transformers library:

from sentence_transformers import SentenceTransformer

model = SentenceTransformer("sentence-transformers/all-mpnet-base-v2")

# One embedding vector per customer profile
vectors = model.encode(chunks)

Step 3: Storing the Embeddings in Vector Store

Now, you can store these embeddings in a database like Qdrant, which can also be used for semantic searches. The choice of the vector database is yours.

from qdrant_client import QdrantClient
from qdrant_client.models import Distance, VectorParams

# In-memory instance, suitable for experiments
client = QdrantClient(":memory:")

client.recreate_collection(
    collection_name="customer-profiles",
    vectors_config=VectorParams(size=len(vectors[0]), distance=Distance.COSINE),
)

# Point IDs match the index of each profile in `chunks`
client.upload_collection(
    collection_name="customer-profiles",
    ids=list(range(len(chunks))),
    vectors=vectors,
)

Step 4: Implementing the Context Generation Function

We will now create a function that fetches the context for a question. It embeds the question and uses a similarity search to find the five customer profiles closest to it:

def make_context(question):
    # Embed the question with the same model used for the profiles
    ques_vector = model.encode(question)
    result = client.query_points(
        collection_name="customer-profiles",
        query=ques_vector,
        limit=5,
    )
    # Point IDs are the indices of the matching profiles in `chunks`
    sim_ids = [point.id for point in result.points]
    # Separate profiles with a blank line so they do not run together
    return "\n\n".join(chunks[i] for i in sim_ids)
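When several profiles are joined into one context string, a clear separator and a size cap help keep the prompt readable and bounded, since customers with long purchase histories produce long profiles. The sketch below is one possible way to do this; `build_context`, `max_chars`, and the separator are assumptions for illustration, not part of the tutorial's code.

```python
def build_context(chunks, ranked_ids, top_k=5, max_chars=4000, sep="\n---\n"):
    """Join the top_k ranked chunks, stopping before max_chars is exceeded."""
    parts = []
    used = 0
    for idx in ranked_ids[:top_k]:
        chunk = chunks[idx]
        # Account for the separator that precedes every chunk but the first
        extra = len(chunk) + (len(sep) if parts else 0)
        if used + extra > max_chars:
            break
        parts.append(chunk)
        used += extra
    return sep.join(parts)


# Tiny stand-in chunks; ranked_ids would come from the similarity search.
toy_chunks = ["aaaa", "bbbb", "cccc"]
print(build_context(toy_chunks, [2, 0, 1], top_k=2))
```

Because the IDs arrive ordered by similarity, stopping at the budget drops the least relevant profiles first.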

Step 5: Generating Responses Using LLM

We can now use Ollama to access open-source multilingual models like Llama 3.1-70B to generate meaningful responses based on context – in this case, a customer profile provided.

For that, first, install Ollama.

$ curl -fsSL https://ollama.com/install.sh | sh

$ ollama pull llama3.1:70b

$ ollama run llama3.1:70b

Now, you can use it in your code.

import ollama

def respond(question):
    # Ground the answer in the retrieved customer profiles
    prompt = (f"This is the question asked by the user: {question}\n"
              f"This is the context given: {make_context(question)}\n"
              "Answer this question based on the context provided, in 1 to 2 lines.")
    response = ollama.chat(
        model="llama3.1:70b",
        messages=[{'role': 'user', 'content': prompt}],
    )
    return response['message']['content']
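The prompt above mixes instructions, context, and question in a single user message. A common alternative is to keep the instructions in a separate system message. The sketch below only builds the message list, so it runs without a model; `build_messages` and the instruction wording are assumptions, not part of the tutorial's code.

```python
def build_messages(question, context):
    """Return a chat message list with instructions kept apart from the data."""
    system = (
        "You are an e-commerce assistant. Answer in 1 to 2 lines, "
        "using only the customer context provided. "
        "If the context does not contain the answer, say so."
    )
    user = f"Context:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


messages = build_messages("What did Dan buy?", "Dan Daniels bought 2 Ceramic Mug")
for message in messages:
    print(message["role"], "->", message["content"][:40])
```

The resulting list could be passed as the `messages` argument of `ollama.chat` inside `respond`, in place of the single user message.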

Step 6: Adding Text-to-Speech Functionality Using Parler TTS

To make the chatbot speak, we integrate the Parler TTS model, which converts the generated text response into audio, using a text description of the desired voice.

import torch

from parler_tts import ParlerTTSForConditionalGeneration

from transformers import AutoTokenizer

import soundfile as sf

device = "cuda:0" if torch.cuda.is_available() else "cpu"

tts_model = ParlerTTSForConditionalGeneration.from_pretrained("parler-tts/parler-tts-mini-v1").to(device)

tokenizer = AutoTokenizer.from_pretrained("parler-tts/parler-tts-mini-v1")

def text_to_speech_fun(prompt):
    # Plain-text description of the voice the model should produce
    description = "A male speaker, with the speaker's voice sounding clear and very close up."
    input_ids = tokenizer(description, return_tensors="pt").input_ids.to(device)
    prompt_input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)
    generation = tts_model.generate(input_ids=input_ids, prompt_input_ids=prompt_input_ids)
    audio_arr = generation.cpu().numpy().squeeze()
    # Save the waveform so the interface can play it back
    sf.write("parler_tts_out.wav", audio_arr, tts_model.config.sampling_rate)
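If the chatbot ever returns longer answers, one option is to split the text into sentence-sized pieces and synthesize them one at a time. This is a sketch under the assumption that the TTS model handles short passages more reliably than long ones; `split_for_tts` and `max_chars` are hypothetical, and the regex is a simple heuristic that does not handle abbreviations such as "e.g.".

```python
import re


def split_for_tts(text, max_chars=200):
    """Pack whole sentences into chunks, aiming for at most max_chars each.

    A single sentence longer than max_chars is kept whole.
    """
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    chunks, current = [], ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and len(candidate) > max_chars:
            # Adding this sentence would overflow, so start a new chunk
            chunks.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


print(split_for_tts("Hi there. How are you? Fine!", max_chars=12))
```

Each piece could then be passed to `text_to_speech_fun`-style generation in turn, with the resulting audio arrays concatenated before saving.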

Step 7: Integrating with the Gradio Interface

Finally, we can use Gradio to create a simple web interface to test the chatbot. The interface will display the text response and play the corresponding audio as well.

import gradio as gr

def generate_response(user_query):
    bot_response = respond(user_query)
    # Synthesize the reply; text_to_speech_fun writes parler_tts_out.wav
    text_to_speech_fun(bot_response)
    audio_file = "parler_tts_out.wav"
    markdown_response = f"#### Answer:\n\n{bot_response}"
    return markdown_response, audio_file

with gr.Blocks() as demo:
    gr.Markdown("## E-commerce Chatbot")
    gr.Markdown("Hello! How may I help you?")
    gr.Markdown("---")
    user_query = gr.Textbox(placeholder="What does Dan Daniels like to buy?")
    # The answer is Markdown text, so render it with a Markdown component
    output = gr.Markdown()
    audio_output = gr.Audio()
    user_query.submit(fn=generate_response, inputs=user_query, outputs=[output, audio_output])

demo.launch(share=True)
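Every response is written to the same `parler_tts_out.wav` file, so two people using the shared link at the same time could overwrite each other's audio. One possible remedy is a unique file path per request. The helper below is a hypothetical sketch; using it would also require `text_to_speech_fun` to accept an output path, which is an assumption and not part of the code above.

```python
import os
import tempfile
import uuid


def new_audio_path(directory=None, prefix="tts_", suffix=".wav"):
    """Return a unique file path for one response's audio (the file is not created)."""
    directory = directory or tempfile.gettempdir()
    return os.path.join(directory, f"{prefix}{uuid.uuid4().hex}{suffix}")


first = new_audio_path()
second = new_audio_path()
print(first != second)
```

The returned path can be handed to the audio component in the same way as the fixed file name.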


Conclusion

By following this guide, you can create an e-commerce chatbot that understands customer queries, retrieves relevant information from customer profiles, and responds with both text and voice. This project combines tools like LangChain, Qdrant, Parler TTS, and Gradio to deliver an interactive, voice-enabled user experience.