Our Aim in This Tutorial

In this guide, we'll demonstrate how to fine-tune Llama 3 on E2E Networks' TIR platform.

TIR provides a ready-made, no-code solution for deploying, fine-tuning, and running inference on LLMs. It lets users upload their datasets into storage buckets that can later be used to build AI workflows. Other TIR features include pipelines, Gen AI APIs, and vector databases, all of which can be launched directly from its UI.

Overall, TIR aims to simplify the management of the computationally intensive workloads run by data scientists and AI/ML researchers.

Let’s Start Fine-Tuning

Let's begin fine-tuning Llama 3. We'll be using the hind_encorp dataset, which contains English text snippets paired with their Hindi translations. Here's what a record looks like:

```json
{ "en": "They had justified their educational policy of concentrating on the education of a small number of upper and middle-class people with the argument that the new education would gradually ' filter down ' from above .", "hi": "कम संख़्या वाले उच्च एवं मध्यम श्रेणी के लोगों तक ही अपनी शिक्षा नीति को केंद्रित करने को इस तर्क के साथ न्यायसंगत बताया कि नयी शिक्षा Zक्रमश : ऊपर से नीचे की ओर छनते हुए जायेगी ." }
```

We'll use this dataset to improve our Llama 3 model's ability to translate into Hindi.

The TIR platform expects the dataset in JSONL format (one JSON object per line), so let's first convert our dataset into that format.

```python
import json

from datasets import load_dataset

# Load the training split of hind_encorp from the Hugging Face Hub
data = load_dataset('hind_encorp', split='train')
df = data.to_pandas()

# Sample 10% of the rows randomly (adjust frac to change the fraction;
# random_state makes the sample reproducible)
sampled_df = df.sample(frac=0.1, random_state=42)

# Map each row's 'translation' dict ({'en': ..., 'hi': ...}) to an input/output pair
def extract_values(row):
    return {'input': row['translation']['en'], 'output': row['translation']['hi']}

# Build one JSONL record per sampled row
sampled_df['jsonl_format'] = sampled_df.apply(extract_values, axis=1)

# Write one JSON object per line; ensure_ascii=False keeps the Hindi text readable
with open('output.jsonl', 'w', encoding='utf-8') as file:
    for item in sampled_df['jsonl_format']:
        json.dump(item, file, ensure_ascii=False)
        file.write('\n')
```

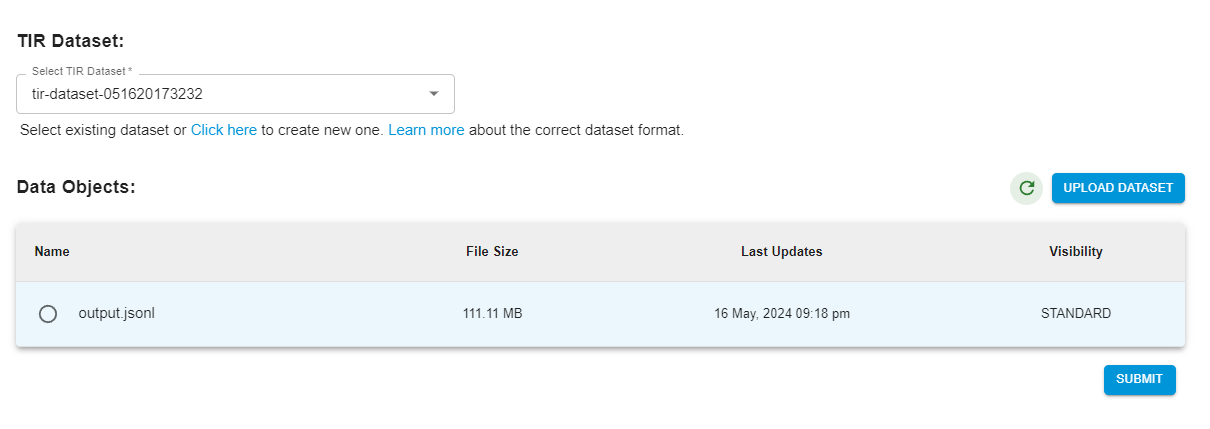

Next, we'll upload this data to an EOS bucket. On the TIR platform, click Dataset and then Create Dataset.

Once you've created a dataset, you can use the MinIO CLI to upload the JSONL file into the dataset's EOS bucket.
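Before uploading, it's worth checking that every line of output.jsonl parses and carries the two fields the rest of the workflow relies on. This is a small sketch: `validate_records` and `validate_jsonl` are hypothetical helpers, and requiring non-empty `input` and `output` strings is our own assumption, not a documented TIR rule.

```python
import json

REQUIRED_KEYS = ("input", "output")


def validate_records(lines) -> int:
    """Count valid JSONL records; raise ValueError on the first malformed line.

    json.JSONDecodeError (raised for unparsable lines) is a subclass of ValueError.
    """
    count = 0
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines, e.g. a trailing newline
        record = json.loads(line)
        if not isinstance(record, dict):
            raise ValueError(f"line {lineno}: expected a JSON object")
        for key in REQUIRED_KEYS:
            value = record.get(key)
            if not isinstance(value, str) or not value.strip():
                raise ValueError(f"line {lineno}: missing or empty {key!r}")
        count += 1
    return count


def validate_jsonl(path: str) -> int:
    """Validate a JSONL file on disk, reading it as UTF-8."""
    with open(path, encoding="utf-8") as f:
        return validate_records(f)
```

Usage: `print(validate_jsonl("output.jsonl"), "records look valid")`. Splitting the check from the file handling keeps the core logic easy to test on in-memory lines.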

Now go to Foundation Studio and click on Fine Tune Models. Select Llama-3-8B as your model, and create a Hugging Face Integration token.

Set the dataset type to Custom, then choose the dataset we created earlier; the files we uploaded are stored in its EOS bucket.

Next, adjust the prompt configuration so that it matches the dataset's fields (input and output).
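TIR's exact prompt-configuration syntax isn't shown here, so the following only sketches the idea: a template whose placeholder names match the JSONL keys written earlier, filled in once per record. `PROMPT_TEMPLATE`, `template_fields`, and `render_prompt` are hypothetical names, the template wording is our assumption, and TIR's own placeholder syntax may differ.

```python
from string import Formatter

# Hypothetical template; placeholder names must match the JSONL keys ("input", "output")
PROMPT_TEMPLATE = (
    "Translate the following English text into Hindi: {input}\n"
    "Hindi translation: {output}"
)


def template_fields(template: str) -> set[str]:
    """Return the placeholder names used in a str.format-style template."""
    return {name for _, name, _, _ in Formatter().parse(template) if name}


def render_prompt(record: dict, template: str = PROMPT_TEMPLATE) -> str:
    """Fill the template from one JSONL record, failing loudly on missing keys."""
    missing = template_fields(template) - set(record)
    if missing:
        raise KeyError(f"record is missing fields: {sorted(missing)}")
    return template.format(**record)


print(render_prompt({"input": "Good morning", "output": "सुप्रभात"}))
```

Checking the placeholders against each record up front is what "aligning with the dataset" amounts to: a template that refers to, say, {en} would fail on every record produced by the conversion script.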

In the next section, you can tune the hyperparameters for the fine-tuning job. We'll go with the default values.

Additionally, you can set up a Weights & Biases (W&B) integration if you wish to monitor the fine-tuning process.

Select the GPU node you wish to use for running the fine-tuning.
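When choosing a GPU, a useful first check is how much memory the model weights alone occupy: parameter count times bytes per parameter. The sketch below assumes a nominal 8 billion parameters for Llama-3-8B; it is a lower bound only, since training also needs memory for activations and, depending on how TIR runs the job (not specified in this tutorial), gradients and optimizer states.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory (GB, where 1 GB = 1e9 bytes) needed just to hold the weights."""
    return num_params * bytes_per_param / 1e9


# Nominal 8 billion parameters for Llama-3-8B, at a few common precisions
for dtype, nbytes in [("fp32", 4), ("bf16/fp16", 2), ("int8", 1), ("4-bit", 0.5)]:
    print(f"{dtype:>10}: ~{weight_memory_gb(8e9, nbytes):.0f} GB")
```

For example, at bf16 the weights alone need about 16 GB, so a GPU with only 16 GB of memory would leave no headroom for the rest of the fine-tuning job.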

With that set, you can launch the process.

Inference

Once fine-tuning is complete, your model will be ready to deploy from the Fine Tune Models section of Foundation Studio.

Go ahead and deploy the model. The deployed model will be available in the Model Endpoints section of the Inference tab.

Click on the API request to receive your API key for the endpoint.

You can query the endpoint in the following manner:

```python
import json

import requests

# Placeholders: fill in your endpoint URL, API key, and query
url = 'YOUR_ENDPOINT_URL'
api_key = 'YOUR_API_KEY'
query = 'YOUR_QUERY'

payload = json.dumps({
    "instances": [
        {"text": query}
    ]
})

headers = {
    'Content-Type': 'application/json',
    'Authorization': f'Bearer {api_key}',
}

response = requests.post(url, headers=headers, data=payload)
print(response.text)
```
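For repeated queries, the request above can be wrapped in a small helper. This is a sketch rather than an official TIR client: `build_request` and `query_endpoint` are hypothetical names, the 60-second timeout is an arbitrary assumption, and `raise_for_status()` makes HTTP errors raise an exception instead of silently returning an error body.

```python
import json


def build_request(query: str, api_key: str) -> tuple[dict, str]:
    """Build the headers and JSON body in the same shape as the request above."""
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    body = json.dumps({"instances": [{"text": query}]})
    return headers, body


def query_endpoint(url: str, query: str, api_key: str, timeout: float = 60.0) -> str:
    """POST a single query to the endpoint and return the raw response text."""
    import requests  # lazy import: only needed when actually sending a request

    headers, body = build_request(query, api_key)
    response = requests.post(url, headers=headers, data=body, timeout=timeout)
    response.raise_for_status()  # raise on 4xx/5xx responses
    return response.text
```

Usage: `print(query_endpoint(url, "Translate the following English Text into Hindi: ...", api_key))`. Keeping request construction separate from the network call makes the payload easy to inspect or test on its own.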

Let’s try out a query:

“Translate the following English Text into Hindi: Experience the state-of-the-art performance of Llama 3, an openly accessible model that excels at language nuances, contextual understanding, and complex tasks like translation and dialogue generation. With enhanced scalability and performance, Llama 3 can handle multi-step tasks effortlessly, while our refined post-training processes significantly lower false refusal rates, improve response alignment, and boost diversity in model answers. Additionally, it drastically elevates capabilities like reasoning, code generation, and instruction following. Build the future of AI with Llama 3.”

Results:

```
'{"predictions":["Here is the translation of the provided English text into Hindi:

"लामा 3 के अत्याधुनिक प्रदर्शन का अनुभव करें, जो एक खुलकर सुलभ मॉडल है जो भाषा की बारीकियों, संदर्भिक समझ और अनुवाद तथा संवाद सृजन जैसे जटिल कार्यों में उत्कृष्ट है। बेहतर स्केलेबिलिटी और प्रदर्शन के साथ, लामा 3 आसानी से मल्टी-स्टेप कार्यों को संभाल सकता है, जबकि हमारी परिष्कृत पोस्ट-ट्रेनिंग प्रक्रियाएं गलत इनकार दरों को काफी कम करती हैं, प्रतिक्रिया संरेखण में सुधार करती हैं, और मॉडल उत्तरों में विविधता बढ़ाती हैं। इसके अतिरिक्त, यह तर्क करने की क्षमता, कोड जनरेशन, और निर्देशों का पालन करने जैसी क्षमताओं को भी उल्लेखनीय रूप से ऊंचा उठाता है। लामा 3 के साथ एआई के भविष्य का निर्माण करें।"

"]}'
```

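The endpoint returns the predictions as a JSON string, so using the translation programmatically means decoding it first. A minimal sketch, assuming every response has the `{"predictions": [...]}` shape shown above; `extract_prediction` is a hypothetical helper name.

```python
import json


def extract_prediction(response_text: str) -> str:
    """Return the first generated text from a '{"predictions": [...]}' response body."""
    data = json.loads(response_text)
    predictions = data.get("predictions") or []
    if not predictions:
        raise ValueError("response contained no predictions")
    return predictions[0].strip()


# A shortened response in the same shape as the one above
raw = '{"predictions": ["  Here is the translation:\\n\\nनमस्ते  "]}'
print(extract_prediction(raw))
```

Note that the model may prepend a sentence such as "Here is the translation..." before the Hindi text, so any further post-processing depends on how consistently it formats its answers.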
Conclusion

In this blog, we fine-tuned Llama 3 on TIR to improve its English-to-Hindi translation, deployed the model, and queried its endpoint. Go ahead and try it for yourself.