Coding assistants are emerging as a path-breaking technology that boosts developer productivity by helping with code completion and debugging. According to a GitHub survey, 92% of US-based developers already use AI coding tools both in and outside of work, and a similar upward trend is likely to follow in other regions, especially the Global South. The market for AI coding assistants is projected to grow at a CAGR of 41.2% from 2021 to 2028, reaching $2.3 billion by 2028.

Software and IT services companies that want to harness the productivity gains offered by coding copilots have two options: use a proprietary copilot platform, or deploy their own stack. In this article, we explain why the latter is the better choice for deploying AI coding assistants, and we offer a step-by-step guide to deploying one for your dev teams.

Why Host AI Coding Assistants on Your Own Cloud Infrastructure

One of the key advantages of self-hosted coding assistants is cost-effectiveness, especially for large development teams. When hundreds of developers need access to a coding assistant, an open-source stack hosted on the company's own cloud can be far more economical than subscriptions to proprietary copilot software. By deploying the models themselves, organizations avoid the recurring costs of per-user licenses or usage-based pricing.

Moreover, when companies host their own coding assistants, they get greater control and customization options. Development teams can fine-tune the AI models to align with their specific coding conventions, frameworks, and project requirements.

Another significant benefit of hosting coding assistants on your own cloud infrastructure is data privacy and security. When dealing with sensitive or proprietary codebases and internal IP, organizations may prefer to keep their data within their own infrastructure rather than send it to third-party services. This matters even more when compliance requirements apply.

Self-hosted assistants can also integrate seamlessly with existing development environments and workflows. Developers can access the assistant's features directly within their preferred code editors or integrated development environments (IDEs), without switching between different tools or platforms.

In terms of performance, hosting coding assistants on your own cloud infrastructure can offer lower latency and more flexible scaling than proprietary platforms, particularly when the GPUs run in a region close to the development team. Because the models run on dedicated infrastructure, developers can get quicker code completions and suggestions.

Steps to Host a CodeLlama-Based Coding Assistant on E2E's Cloud GPUs

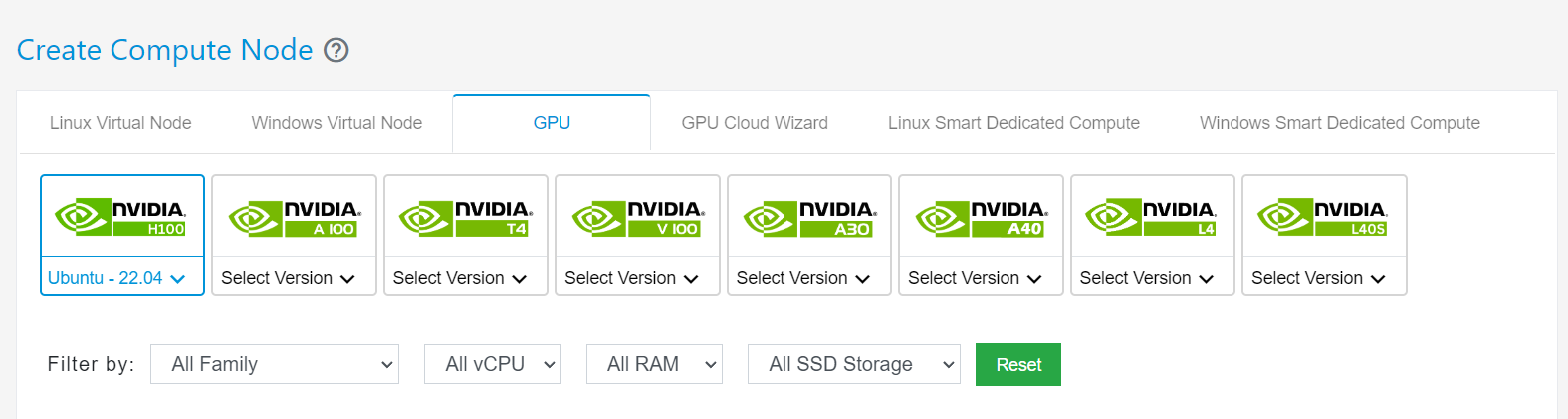

E2E Networks provides an array of advanced Cloud GPUs that are well-suited for high-performance AI workloads.

For this blog, we used a V100 node with 32 GB of GPU memory, which is enough to load the 7B CodeLlama model. For lower latency and higher throughput, consider an A100 or H100 instead: they let you serve more developers and run several instances of the LLM in parallel on the same machine, which improves scalability.
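As a rough sizing check, the GPU memory needed just for the model weights is the parameter count times the bytes stored per parameter. The estimate below assumes a nominal 7 billion parameters and ignores the KV cache and runtime overhead, which add to the total, so treat the figures as lower bounds rather than measurements; `ollama list` shows the actual size of the tag you pulled.

```latex
\begin{aligned}
M_{\text{weights}} &\approx N_{\text{params}} \times \frac{b}{8}\ \text{bytes}, \quad b = \text{bits per parameter}\\
\text{16-bit (FP16):}\quad M &\approx 7\times10^{9} \times \tfrac{16}{8} = 1.4\times10^{10}\ \text{bytes} \approx 14\ \text{GB}\\
\text{4-bit quantized:}\quad M &\approx 7\times10^{9} \times \tfrac{4}{8} = 3.5\times10^{9}\ \text{bytes} \approx 3.5\ \text{GB}
\end{aligned}
```

Either fits in 32 GB, but every additional instance of the model loads its own copy of the weights, which is what caps how many parallel instances a single GPU can hold.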

Let’s start.

Step 1 - Deploying the Coding Assistant LLM

One of the simplest and fastest ways to serve an open-source LLM is Ollama, so that is what we'll use to serve the model.

Install it by running this in your terminal:

```shell
curl -fsSL https://ollama.com/install.sh | sh
```

Start the Ollama server bound to 0.0.0.0 so that it can be reached from other machines (by default, Ollama listens only on 127.0.0.1):

```shell
OLLAMA_HOST=0.0.0.0 ollama serve
```
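On Linux, the install script usually also registers Ollama as a systemd service that starts automatically and listens on 127.0.0.1:11434. If that service is running, the manual `ollama serve` command above is likely to fail with an "address already in use" error. In that case, you can set the variable on the service instead, as described in Ollama's FAQ: run `sudo systemctl edit ollama.service`, add the lines below, then run `sudo systemctl daemon-reload` and `sudo systemctl restart ollama`.

```ini
[Service]
Environment="OLLAMA_HOST=0.0.0.0"
```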

The server listens on Ollama's default port, 11434. With it running, open a second terminal and pull CodeLlama into your local server:

```shell
curl http://localhost:11434/api/pull -d '{
  "name": "codellama:7b"
}'
```

You can verify that the server is up and running by sending the following request:

```shell
curl http://localhost:11434/api/generate -d '{
  "model": "codellama:7b",
  "prompt": "Give me python code for calculating fibonacci series?",
  "stream": false
}'
```
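The request above only exercises the server from the GPU node itself. Because Continue will connect from developer machines, it is worth running a quick check from one of those machines as well. The helper below is a hypothetical sketch (the `check_ollama` name is ours, not part of Ollama); it calls Ollama's `GET /api/tags` endpoint, which lists the models pulled on the server, and assumes `curl` is installed and that port 11434 on the GPU node is open to your network.

```shell
#!/bin/sh
# check_ollama HOST[:PORT] [MODEL]   (hypothetical helper, not an Ollama command)
# Succeeds if an Ollama server answers at HOST:PORT (default port 11434) and,
# when MODEL is given, that model appears in the server's /api/tags listing.
check_ollama() {
  target=$1
  model=$2
  # Append Ollama's default port if the caller did not give one
  case "$target" in
    *:*) ;;
    *) target="$target:11434" ;;
  esac
  # GET /api/tags returns JSON describing the models pulled on the server
  if ! tags=$(curl -fs --max-time 5 "http://$target/api/tags" 2>/dev/null); then
    echo "no Ollama server reachable at $target" >&2
    return 1
  fi
  # Plain substring match on the JSON; good enough for a smoke test
  if [ -n "$model" ]; then
    case "$tags" in
      *"\"$model\""*) ;;
      *) echo "$target is up but $model is not pulled" >&2; return 2 ;;
    esac
  fi
  echo "Ollama OK at $target"
}
```

From a developer laptop, run `check_ollama <server-ip> codellama:7b`, replacing `<server-ip>` with the GPU node's address. A non-zero exit status of 1 points to networking or firewall issues, while 2 means the server is reachable but the model still needs to be pulled.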

Step 2 - Integrating with VSCode

To use this API endpoint as a coding assistant inside VSCode, install the Continue extension.

Once it is installed, open Continue, click the + sign, and select Ollama as the provider.

Then open the config.json file and add the following entry to the models list, replacing `<server-ip>` with the IP address of your GPU node:

```json
{
  "title": "Ollama",
  "model": "codellama:7b",
  "completionOptions": {},
  "apiBase": "http://<server-ip>:11434/",
  "provider": "ollama"
}
```

That's it. You can now start asking your assistant questions. Below is a screenshot of our integration:

Dealing with Concurrent Requests

Suppose your company has 100 developers and, at any given time, about 10 of them send requests concurrently. In that case, it makes sense to launch 10 separate Ollama instances on the GPU node, each on its own port, so that the workload is distributed across them.
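One way to start those instances is to run one `ollama serve` process per port, using the same `OLLAMA_HOST` variable as in Step 1. The sketch below is hypothetical (`launch_instances`, `BASE_PORT`, and `DRY_RUN` are our own names). It assumes `codellama:7b` has already been pulled, that all instances run as the same user so they share one local model store, and that nothing else, including the server from Step 1, is already listening on the chosen ports. Keep in mind that every instance loads its own copy of the model into GPU memory.

```shell
#!/bin/sh
# Hypothetical helper: start COUNT independent Ollama servers on consecutive
# ports BASE_PORT .. BASE_PORT+COUNT-1. Set DRY_RUN=1 to print the commands
# instead of running them.
BASE_PORT=${BASE_PORT:-11434}

launch_instances() {
  count=${1:-1}
  i=0
  while [ "$i" -lt "$count" ]; do
    port=$((BASE_PORT + i))
    if [ -n "$DRY_RUN" ]; then
      # Preview mode: show what would be started
      echo "OLLAMA_HOST=0.0.0.0:${port} ollama serve"
    else
      # Run in the background with one log file per instance
      OLLAMA_HOST="0.0.0.0:${port}" nohup ollama serve >"ollama-${port}.log" 2>&1 &
    fi
    i=$((i + 1))
  done
}
```

Running `DRY_RUN=1 launch_instances 10` previews the ten commands for ports 11434 to 11443, and `launch_instances 10` starts them. Recent Ollama releases can also process several requests within a single server through the `OLLAMA_NUM_PARALLEL` environment variable, which avoids duplicate copies of the weights; it may be worth comparing both setups on your hardware.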

Each group of 10 developers can then be assigned to one instance. That way, the load is spread evenly as long as usage is roughly uniform across groups.
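The group-to-instance assignment can be expressed as a simple integer division. The helpers below are a hypothetical sketch: they number developers from 0, assume instances run on consecutive ports starting at Ollama's default 11434, and use the 10-developers-per-instance split described above. The `instance_api_base` output is the value to put in the `apiBase` field of that developer's Continue config.

```shell
#!/bin/sh
# Hypothetical helpers for a static developer-to-instance mapping.
BASE_PORT=${BASE_PORT:-11434}
DEVS_PER_INSTANCE=${DEVS_PER_INSTANCE:-10}

# instance_port N -> port of the instance serving developer N (0-based).
# With 10 instances, valid developer numbers are 0..99.
instance_port() {
  echo $((BASE_PORT + $1 / DEVS_PER_INSTANCE))
}

# instance_api_base HOST N -> apiBase URL for developer N's Continue config
instance_api_base() {
  echo "http://$1:$(instance_port "$2")/"
}
```

For example, `instance_api_base <server-ip> 23` yields the URL for port 11436, since developer 23 falls in the third group. A static mapping needs no extra infrastructure, but it cannot shift load if one group turns out to be busier than the others.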

Conclusion

Many of our customers already run their own open-source coding assistant setups for their dev teams. This helps them retain control over their R&D stack and their IP, while making their developers more efficient and productive. To learn more, reach out to sales@e2enetworks.com.